Eric Archer sent in a question on Bayesian logistic regression. I don't really have much to say on this one, but since it's about dolphins I'll give it a try anyway. First Eric's question, then my reply.


Our new book on regression and multilevel models is written using R and Bugs. But we'd also like, in an appendix, to quickly show how to fit multilevel models using other software, including Stata, SAS, SPSS, MLwiN, and HLM (and others?). We'd really appreciate your help in getting sample code for these packages.

We've set up five simple examples. I'll give them below, along with the calls in R that would be used to fit the models. We're looking for the comparable commands in the other languages. We don't actually need anyone to fit the models, just to give the code. This should be helpful for students who will be learning from our book using other software platforms.

In a comment here, Aleks points us to Gary C. Ramseyer's Archives of Statistics Fun. In addition to various amusing items, he has the following fun-looking probability demo. For over a decade now, I've been collecting class-participation demonstrations for probability and statistics, and it's not every day or even every week that I hear of a new one. Here it is:

In a comment to this entry, Antony Unwin writes,

It is great to see someone emphasising the use of graphics for exploration as well as for presentation, but if you want to do EDA you need interactive graphics. It's a mystery to me why more people do not use them. Perhaps it's because you have to have fast and flexible software, perhaps it's because of the subjective component in exploratory work, perhaps it's because using interactive graphics is fun as well as productive and statisticians are serious people. I'd be grateful for explanations.

I'm in a good position to answer this question, since I probably should be using interactive graphics but I actually don't. Why not? I don't know how to, and I guess I never was forced to learn. But I'm teaching a seminar course on statistical graphics this semester, so this would be a great time for me (and my students) to learn.

Resources for getting started with dynamic graphics? (preferably in R)

The class will be structured as a student presentation each week. So, one week I'd like a pair of students to present on dynamic graphics. During the week before, they'd learn a dynamic graphics system, then during the class they'd do a demo, then there'd be some homework for all the class for next week.

Any suggestions on what they should do? Ideally it should be in R (since that's what we're using for the course). Antony Unwin suggested iPlots in R and Martin Theus's package Mondrian. The tricky thing is to get a student ready to do a presentation on this, given that I can't already do it myself. The student would need a good example, not just a link to the package.

26-Jan Sanders Korenman, Professor of Public Affairs, Baruch. “Improvements in Health among Black Infants in Washington DC.” (with Danielle Ferry)

2-Feb Kristin Mammen, Assistant Professor of Economics, Barnard. “Fathers' Time Investments in Children: Do Sons Get More?”

9-Feb Sally Findley, Clinical Professor of Population and Family Health, Mailman School of Public Health, CU. “Cycles of Vulnerability in Mali: The interplay of migration with seasonal fluctuations.”

16-Feb FFWG: Jeanne Brooks-Gunn, Virginia and Leonard Marx Professor of Child Development and Education at Teachers College, CU. “Child Care at Three: Preliminary Analyses of Fragile Families.”

2-Mar FFWG: Brad Wilcox, Assistant Professor of Sociology, University of Virginia. Visiting Scholar, CRCW, Princeton University. “Domesticating Men: Religion, Norms, & Relationship Quality Among Fragile Families”

9-Mar Pierre Chiappori, E. Rowan and Barbara Steinschneider Professor of Economics, CU. “Birth Control and Female Empowerment: An Equilibrium Analysis.” NOTE: this seminar will begin at 12:30.


16-Mar FFWG: Mary Clare Lennon, Associate Professor of Clinical Sociomedical Sciences, Mailman School of Public Health, CU. Visiting Scholar, CRCW, Princeton University. “Trajectories of Childhood Poverty: Tools for Analyzing Duration, Timing, and Sequencing Effects.”

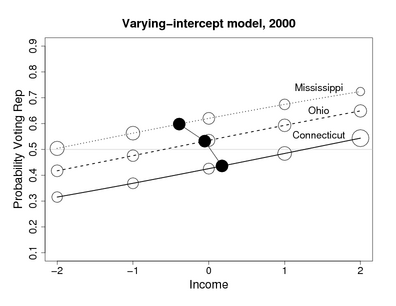

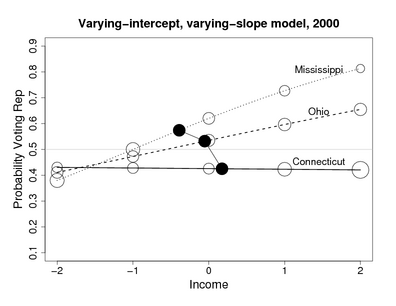

23-Mar Andrew Gelman, Professor of Statistics and Political Science, CU. “Rich State, Poor State, Red State, Blue State: What’s the Matter with Connecticut? A Demonstration of Multilevel Modeling.”

13-Apr Howard Bloom, Chief Research Scientist, MDRC. “Using a Regression Discontinuity Design to Measure the Impacts of Reading First.”

20-Apr FFWG: Barbara Heyns, Professor of Sociology, NYU. Visiting Scholar, CRCW, Princeton University. “The Mandarins of Childhood.”

27-Apr Leanna Stiefel, Professor of Economics, Wagner School, NYU. “Can Public Schools Close the Race Gap? Probing the Evidence in a Large Urban School District.”

4-May FFWG: Cay Bradley, School of Social Policy & Practice, University of Pennsylvania. Title: TBA

11-May Jane Waldfogel, Professor of Social Work and Public Affairs, CUSSW. "What Children Need."

18-May FFWG: Chris Paxson, Professor of Economics and Public Affairs, Princeton. “Income and Child Development.”

I think I noticed this because I've been thinking recently about crime and punishment . . . anyway, Garry Wills in this article in the New York Review of Books makes a basic statistical error. Wills writes:

In the most recent year for which figures are available, these are the numbers for firearms homicides:

Ireland 54

Japan 83

Sweden 183

Great Britain 197

Australia 334

Canada 1,034

United States 30,419

But, as we always tell our students, what about the denominator? Ireland only has 4 million people (it had more in the 1800s, incidentally). Yeah, 30,000/(300 million) is still greater than 54/(4 million), but still . . . this is basic stuff. I'm not trying to slam Wills here--numbers are not his job--but shouldn't the copy editor catch something like that? Flipping it around, if a scientist had written an article for the magazine and had messed up on grammar, I assume the copy editor would've fixed it.
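To make the denominator point concrete, here is a minimal sketch that converts the two counts discussed above into rates per 100,000 people. It uses only the round population figures mentioned in the text (300 million and 4 million); the function name `rate_per_100k` is just an illustrative choice.

```python
def rate_per_100k(count, population):
    """Events per 100,000 people."""
    return 100_000 * count / population

# Counts from the table; populations are the round figures used in the text.
us_rate = rate_per_100k(30_419, 300_000_000)
ireland_rate = rate_per_100k(54, 4_000_000)

count_ratio = 30_419 / 54             # ratio of raw counts
rate_ratio = us_rate / ireland_rate   # ratio after adjusting for population

print(f"US: {us_rate:.2f} per 100k, Ireland: {ireland_rate:.2f} per 100k")
print(f"raw-count ratio: {count_ratio:.0f}x, per-capita ratio: {rate_ratio:.1f}x")
```

The U.S. rate is still several times higher, but the gap in rates is far smaller than the gap in raw counts, which is the whole point of asking about the denominator.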

The problem, I guess, is that there are a lot more people qualified to be grammar-copy-editors than to be statistics-copy-editors. But still, I think that magazines would do well to hire such people.

P.S. I also noticed the Wills article linked here, although they didn't seem to notice the table.

Gabor Grothendieck forwarded the following article by Derek Lowe from Medical Progress Today:

FDA Shows Interest in 18th Century Presbyterian Minister

Bayesian statistics may help improve drug development

Not many ideas of 18th-century Presbyterian ministers attract the interest of the pharmaceutical industry. But the works of Rev. Thomas Bayes have improved greatly with age. . . . For decades, no one heard very much about Bayesian statistics at all. One reason for this was they're much more computationally demanding, which was a real handicap until fairly recently. . . . But things are changing. . . . Bayesian and standard "frequentist" statistics are in many ways mirror images of each other, and there are mistakes to be made each way. . . . Bayesian statistics, though, don't address the likelihood that your observed results might have come out by random chance, but rather give you a likelihood of whether your initial hypothesis is true. . . . As far as I know, no pharma company has yet taken a fully Bayesian clinical package to the FDA for a drug approval. There have been a few dose-finding trials in the cancer area, and Pfizer's research arm in England ran a large trial of a novel stroke therapy under Bayesian protocols. . . .

It's an interesting article in that it's presenting a pharmaceutical-industry perspective of something that I usually think of from an academic direction. I suspect that there's more Bayesian stuff going on in pharmaceuticals than people realize, though: I'd be curious what Amy Racine-Poon or Don Berry or Don Rubin could add to this article. For example, Bayesian data analysis has been used in toxicology for a while.

Also, as I discussed in response to another recent news article about Bayesian inference, I think the differences between Bayesian and other statistical methods can be overstated. In particular, so-called frequentist methods still require "estimates" and "predictions" which can be (and often are) obtained using Bayesian inference. Also, I don't think it's quite right to say that Bayesian methods "give you a likelihood of whether your initial hypothesis is true." It would be more accurate to say that they allow you to express your uncertainty probabilistically, for example, giving some distribution of the effectiveness of a new drug, as compared to an existing treatment. The idea of a point "hypothesis" is, I think, a holdover from classical statistics that is a hindrance, not a help, in Bayesian inference. Finally, the article has a bit of discussion about where the prior comes from. In many many examples, the prior distribution is estimated from data using hierarchical modeling. There's not any need in this framework to specify a numerical prior distribution in the way described in the article.

In conclusion, Lowe's article gives an interesting look at Bayesian inference from another perspective, and also reveals that some of the recent (i.e., since 1980) developments in Bayesian data analysis still have not trickled through to the practitioners. I think that hierarchical modeling is much more powerful than the traditional "hypothesis, prior distribution, posterior distribution" approach to Bayesian statistics.
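To illustrate what "the prior distribution is estimated from data" can look like, here is a rough sketch under strong simplifying assumptions. It is a crude empirical-Bayes, method-of-moments version of a normal hierarchical model with known standard errors, not a full hierarchical Bayesian analysis. The function name, the input numbers, and the idea of treating them as effect estimates from several studies are all hypothetical.

```python
def empirical_bayes_shrinkage(estimates, std_errors):
    """Estimate a normal prior (mu, tau^2) from the data, then shrink each estimate.

    Assumed model: y_j ~ N(theta_j, s_j^2) and theta_j ~ N(mu, tau^2).
    """
    n = len(estimates)
    mu = sum(estimates) / n
    # Method of moments: spread of the estimates minus average sampling variance.
    total_var = sum((y - mu) ** 2 for y in estimates) / (n - 1)
    mean_sampling_var = sum(s ** 2 for s in std_errors) / n
    tau2 = max(total_var - mean_sampling_var, 0.0)

    shrunk = []
    for y, s in zip(estimates, std_errors):
        # Weight on the data: near 1 if the prior is wide, near 0 if it is tight.
        weight = tau2 / (tau2 + s ** 2) if tau2 > 0 else 0.0
        shrunk.append(mu + weight * (y - mu))
    return mu, tau2, shrunk

# Hypothetical effect estimates from three studies, each with standard error 1.
mu, tau2, shrunk = empirical_bayes_shrinkage([1.0, 3.0, 5.0], [1.0, 1.0, 1.0])
print(mu, tau2, shrunk)
```

Nobody types in a numerical prior here: the prior mean and variance come from the variation across studies, and each study's estimate is pulled toward the common mean by an amount that depends on how noisy it is.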

In a comment on this entry, Jim Lebeau links to Scigen, a site by some MIT grad students describing a computer program they wrote to generate context-free simulacra of scientific papers that they submitted to a well-known fake scientific conference called SCI. (As far as I can tell, SCI and similar conferences are scams that make money off of conference fees (and maybe hotel reservations?).) SCI is well-known in that lots of people get their spam "invitations."

Anyway, the Scigen folks went to the "conference" and returned with this amusing report. Basically, the organizers were shifty and the conference attenders were clueless. It gave me a queasy feeling, though: it reminded me of when Joe Schafer and I were grad students and went to a session of cranks at the Joint Statistical Meetings. (Until a few years ago, the JSM used to accept all submissions, and they would schedule the obvious cranks, the people who could prove that pi=3 and so forth, for the last session of the conference.) Anyway, Joe and I showed up and sat at the back of the room. It was basically us and the speakers, maybe one or two other people. We attended a couple of talks. The speakers really were clueless. They were people with real jobs, I think, but with delusions of mathematical grandeur. At some point, Joe and I couldn't stop from cracking up. It was just too much trouble to swallow our laughs, and we had to leave. Then we felt terrible, of course. It's a good thing that the JSM rejects these talks now.

The funny thing is, in the last few years I've been to a couple of real sessions at the JSM at the last time on the last day. And the attendance for these real sessions has been almost as low as for the fake one that Joe and I attended.

Phil writes,

I [Phil] recently read a book called "Becoming a Tiger," about animal learning. It has lots and lots of short pieces, arranged by theme; light reading, but very informative; includes references if one wanted to follow up.

Perhaps my favorite story is about otters. Back in the 60s (I think it was...might have even been 50s) the received wisdom among animal researchers was that animals learn only by operant conditioning: they do what they're rewarded for, they don't do what gets them punished, and that's it. Anyway, this woman is working with otters. She wants to train one to climb onto a box. She puts a box in its cage, it eventually climbs on, and she gives it a treat. It gets very excited, jumps down, runs around the cage, and gets back up on the box. She gives it another treat. Such thrills! It jumps down, and jumps back on the box...and this time it stands on only three legs. She gives it a treat anyway. It jumps down, jumps back up, and lies on its back. And so on. It tries variations: putting just its front legs on the box, putting just its back legs on the box, etc.

Later, the researcher tells this story to some visiting scientists. They don't believe her: "an animal won't do something that might not get it a reward, if it can do something else that will get it a reward." She takes them to see her otter. She holds a hoop under water in the otter's pool. It swims around, looks at it, touches it...and eventually swims through it. She gives it a treat. Excitement! It swims through it again...another treat. It swims through it again, and grabs it out of her hand. No treat. It drops the hoop. She holds it up again. The otter swims through upside down. Treat. Try again, the otter swims through backwards. No treat. And so on. The otter kept on experimenting. One of the visiting researchers said "it takes years for me to train my grad students to be this creative."

Anyway, the book is full of stories of animals doing amazing things, as you might expect. But it also is full of stories of animals doing mental feats that humans can't do, which I did not expect. For example, supposedly a common element on human IQ tests is to look at the sketch of an object and pick which of several choices represents the same object viewed from a different angle. Trained pigeons can do this about as well as trained college students, but the pigeons can do it faster.

I think there could be a fun "reality TV" game show based on this. You would select a human team: an artist, an infant, a brainiac college student, and so on. You would have a series of tasks for them to do, and they would get to choose someone to compete in the task. Some of the tasks would be right up their alley...like, you'd have a basketweaver, and presumably the team would pick him to compete in the basketweaving contest, where he has 3 hours to make an artistic basket out of a pile of straw. He can probably do something pretty cool, but the bowerbird is going to kick his ass. You could find someone who is famous for having a great memory, and they might do pretty well burying 200 small objects in a field and coming back a week later to retrieve them...but some kinds of birds can bury literally thousands of nuts and seeds and find them months later, so this is not going to be a contest either. And in the pigeon vs the college kid in the "object rotation" test, the pigeon will have a slight edge.

John Donohue sent me this paper reviewing evidence about the deterrent effect of the death penalty. I recommend the Donohue and Wolfers paper highly--at least on the technical side, it's the paper I would like to have written on this topic, if I had been able to go through all the details on the many recent papers on the topic. I can't really comment on their policy recommendations but have some further thoughts on the death-penalty studies from a statistical perspective.

I'll first give the key paragraph of the conclusion of their paper, then give my comments:

We [Donohue and Wolfers] have surveyed data on the time series of executions and homicides in the United States, compared the United States with Canada, compared non-death penalty states with executing states, analyzed the effects of the judicial experiments provided by the Furman and Gregg decisions comparing affected states with unaffected states, surveyed the state panel data since 1934, assessed a range of instrumental variables approaches, and analyzed two recent state-specific execution moratoria. None of these approaches suggested that the death penalty has large effects on the murder rate. Year-to-year movements in homicide rates are large, and the effects of even major changes in execution policy are barely detectable. Inferences of substantial deterrent effects made by authors examining specific samples appear not to be robust in larger samples; inferences based on specific functional forms appear not to be robust to alternative functional forms; inferences made without reference to a comparison group appear only to reflect broader societal trends and do not hold up when compared with appropriate control groups; inferences based on specific sets of controls turn out not to be robust to alternative sets of controls; and inferences of robust effects based on either faulty instruments or underestimated standard errors are also found wanting.

My thoughts

My first comment is that death-penalty deterrence is a difficult topic to study. The treatment is observational, the data and the effect itself are aggregate, and changes in death-penalty policies are associated with other policy changes. (In contrast, my work with Jim Liebman and others on death-penalty reversals was much cleaner--our analysis was descriptive rather than causal, and the problem was much more clearly defined. This does not make our study any better, but it certainly made it easier.) Much of the discussion of the deterrence studies reminds me of a little-known statistical principle, which is that statisticians (or, more generally, data analysts) look best when they are studying large, clear effects. This is a messy problem, and nobody is going to come out of it looking so great.

My second comment is that a quick analysis of the data, at least since 1960, will find that homicide rates went up when the death penalty went away, and then homicide rates declined when the death penalty was re-instituted (see Figure 1 of the Donohue and Wolfers paper), and similar patterns have happened within states. So it's not a surprise that regression analyses have found a deterrent effect. But, as noted, the difficulties arise because of the observational nature of the treatment, and the fact that other policies are changed along with the death penalty. There are also various technical issues that arise, which Donohue and Wolfers discussed.

Also some quick comments:

- Figure 1 should be two graphs, one for homicide rate and one for execution rate.

- Figure 2 should be 2 graphs, one on top of the other (so time line is clear), one for the U.S. and one for Canada. Actually, once Canada is in the picture, maybe consider breaking U.S. into regions, since the northern U.S. is perhaps a more reasonable comparison to Canada.

- Figure 8 would be much clearer as 12 separate time series in a grid (with common x- and y-axes). It's hopeless to try to read all these lines. With care, you could fit these 12 little plots in the exact same space as this single graph.

Another view

Here's an article by Joanna Shepherd stating the position that the death penalty deters homicides in some states but not others. Her story is possible but it seems to me (basically, for the reasons discussed in the Donohue and Wolfers paper) not a credible extrapolation from the data.

The death penalty as a decision-analysis problem?

Policy questions about the death penalty have sometimes been expressed in terms of the number of lives lost or saved by a given sentencing policy. But I think this direction of thinking might be a dead end. First off, as noted above, it may very well be essentially impossible to statistically estimate the net deterrent effect of death sentencing--what seem like the "hard numbers" (in Richard Posner's words, "careful econometric analysis") aren't so clear at all.

More generally, though, I'm not sure how you balance out the chance of deterring murders with the chance of executing an innocent person. What if each death sentence deterred 0.1 murder, and 5% of people executed were actually innocent? That's still a 2:1 ratio (assuming that it's OK to execute the guilty people). Then again, maybe these innocent people who were executed weren't so innocent after all. But then again, not every murder victim is innocent either. Conversely, suppose that executing an innocent person were to deter 2 murders (or, equivalently, that freeing an innocently-convicted man were to un-deter 2 murders). Then the utility calculus would suggest that it's actually OK to do it. In general I'm a big fan of probabilistic cost-benefit analyses (see, for example, chapter 22 of my book), but here I don't see it working out. The main concerns--on the one hand, worry about out-of-control crime, and on the other hand, worry about executing innocents--just seem difficult to put on the same scale.
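For readers who want to see where the 2:1 figure comes from, here is the arithmetic as a tiny sketch. The two inputs are the purely hypothetical numbers from the paragraph above, not estimates of anything, and the variable names are illustrative.

```python
from fractions import Fraction

# Hypothetical inputs from the text (not empirical estimates).
murders_deterred_per_execution = Fraction(1, 10)   # 0.1 murder deterred
innocent_share_of_executions = Fraction(5, 100)    # 5% of those executed

# Murders deterred for every innocent person executed.
ratio = murders_deterred_per_execution / innocent_share_of_executions
print(f"{ratio}:1")
```

The calculation is trivial; the hard part, as argued above, is that the two quantities being divided do not obviously belong on the same scale.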

Finally, regarding decision analysis, incentives, and so forth: much of the discussion (not in the Donohue and Wolfers paper, but elsewhere) seems to go to the incentives of potential murderers. But the death penalty also affects the incentives of judges, juries, prosecutors, and so forth. One of the arguments in favor of the death penalty is that it sends a message that the justice system is serious about prosecuting murders. This message is sent to the population at large, I think, not just to deter potential murderers but to make clear that the system works. Conversely, one argument against the death penalty is that it motivates prosecutors to go after innocent people, and to hide or deny exculpatory evidence. Lots of incentives out there.

Kjetil Halvorsen linked to this story, "Research cheats may be jailed," from a Norwegian newspaper:

State officials have been considering imposing jail terms on researchers who fake their material, but the proposal hasn't seen any action for more than a year. More is expected now, after a Norwegian doctor at the country's most prestigious hospital was caught cheating in a major publication. . . . The survey allegedly involved falsification of the death- and birthdates and illnesses of 454 "patients." The researcher wrote that his survey indicated that use of pain medication such as Ibuprofen had a positive effect on cancers of the mouth. He's now admitted the survey was fabricated and reportedly is cooperating with an investigation into all his research.

And here's more from BBC News:

Norwegian daily newspaper Dagbladet reported that of the 908 people in Sudbo's study, 250 shared the same birthday.

Slap a p-value on that one, pal.
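Taking that invitation literally, here is a rough sketch of the calculation. It assumes birthdays are independent and uniform over 365 days, which is an assumption made here for illustration and not something stated in the news reports. It bounds the probability that some single day is shared by at least 250 of 908 people, working on the log scale because the number is far too small for ordinary floating point. The function names are hypothetical.

```python
import math

def log_binom_pmf(k, n, p):
    """Natural log of the Binomial(n, p) probability of exactly k successes."""
    return (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(p) + (n - k) * math.log1p(-p))

def log10_tail(k_min, n, p):
    """log10 of P(X >= k_min) for X ~ Binomial(n, p), via log-sum-exp."""
    logs = [log_binom_pmf(k, n, p) for k in range(k_min, n + 1)]
    m = max(logs)
    total = m + math.log(sum(math.exp(v - m) for v in logs))
    return total / math.log(10)

# P(at least 250 of 908 born on one particular day), then a union bound
# over all 365 days (multiplying by 365 adds log10(365) on the log scale).
log10_one_day = log10_tail(250, 908, 1 / 365)
log10_any_day = log10_one_day + math.log10(365)
print(f"log10 of the upper bound on the p-value: {log10_any_day:.0f}")
```

Under these assumptions the bound has hundreds of zeros after the decimal point, so "p < 0.001" does not quite do it justice.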

Speaking as a citizen, now, not as a statistician, I don't see why they have to put these people in jail for a year. Couldn't they just give them a big fat fine and make them clean bedpans every Saturday for the next 5 years, or something like that? I would think the punishment could be appropriately calibrated to be both a deterrent and a service, rather than a cost, to society.

P.S. Update here.

Latest update from the front lines of the crisis in scientific education:

Curious George's Big Book of Curiosities has a picture of a rainbow, with the following colors, in order: red, yellow, blue, green. What the . . . ?

They also have a page of Shapes, which includes a circle, a triangle, a rectangle, an oval, and two different squares, one of which is labeled "square"--the other is labeled "diamond." So much for rotation-invariance!

Duncan Watts is a colleague of mine in the sociology department at Columbia. He is a very active researcher in the area of social networks and runs a fascinating seminar. Anyway, he and his student, Gueorgi Kossinets, recently published a paper in Science, entitled "Empirical analysis of an evolving social network." He then had an annoying interaction with Helen Pearson, a science reporter who writes a column for Nature online. Duncan writes:

I [Duncan Watts] was particularly pleased when Ms. Pearson called me last week, expressing her interest in writing a story for Nature's online news site. Having read Philip Ball's careful and insightful reports for years, I imagined that Nature News would be a great opportunity for us to have a substantive but accessible news story written about our work. And after speaking with Ms. Pearson for about two hours on the phone, over two consecutive days, sending her some additional reading material, and recommending (at her request) a number of other social network researchers she could talk to, I felt pretty confident that we would have exactly that. She asked lots of questions, seemed intent on understanding my responses, and generally acted like a real science journalist.

So imagine my surprise when Monday morning I saw that our work had been characterized as "bizarre" and "pointless" in a derisive fluff piece by a fictional columnist.

Well, any publicity is good publicity, and all that. As I told Duncan, having a paper in Science is much much more of a plus than this silly article is a minus. If anything, it might get people to read the article and see that it has some cool stuff inside. I think the people who we respect will have much more respect for a peer-reviewed article in a top journal than for a silly pseudo-populist column.

On the other hand, what a waste of space--well, it's an online publication, so there are no real space restrictions. But what a waste of Duncan's time and energy. Basically, Pearson is trading on the reputation of Nature, and her own reputation as a science journalist. To pretend to be writing a serious story and then to mock, is simply unethical. Why not simply be honest--if she wants to mock, just say so upfront, or just write the article without wasting Duncan's time?

What about my own experiences in this area? I generally want my work to be publicized--why do the work if nobody will hear about it?--and so I'm happy to talk to reporters. I've tried to avoid the mockers, however. When Seth and I taught our freshman seminar on left-handedness several years ago at Berkeley, we were contacted by various news organizations in the San Francisco area. At one point a TV station wanted to send a crew into the class, but they decided not to after I explained that the class mostly involved readings from the scientific literature, not original research. Also, one publication that contacted us talked with Seth first, and he got the sense that they were just fishing for quotes for a story they wanted to write on stupid college classes, or something like that. So I avoided talking with them.

Also, I was mocked in the House of Commons once! Well, not by name, but in 1994, I think it was, I spoke at a conference in England on redistricting, to tell them of our findings about U.S. redistricting. Someone told me that a parliamentarian with the Dickensian name of Jack Straw had mocked our paper, which was called Enhancing Democracy Through Legislative Redistricting. But then someone else told me that being mocked by Jack Straw was kind of a badge of honor, so I don't know.

Anyway, it's too bad to see the reputation of Nature Online diminished in this way, and I hope Duncan has better luck in his future dealings with the press.

P.S.

See here for a funnier version of Pearson's article with bits such as "needed to hammer home the 'duh' point a bit here I think. Ok?". I also like that in this version she describes the journal Science as "one of my very favourite reads." I mean, I like research papers as much as the next guy, but I have to admit that I wouldn't usually curl up in my favorite (whoops, I mean "favourite") chair with a science journal.

Seth wrote an article, "Three Things Statistics Textbooks Don’t Tell You." I don't completely agree with the title (see below) but I pretty much agree with the contents. As with other articles by Seth, there are lots of interesting pictures. My picky comments are below.

Gregor Gorjanc writes,

Joost van Ginkel writes,

Gary King, along with Bernard Grofman, Jonathan Katz, and myself, wrote an amicus brief for a Supreme Court case involving the Texas redistricting. Without making any statement on the redistricting itself (as noted in the brief, I've never even looked at a map of the Texas redistricting, let alone studied it in any way, quantitative or otherwise), we make the case that districting plans can be evaluated with regard to partisan bias, and that such evaluation is uncontroversial in social science and does not require any knowledge or speculation about the intent of the redistricters.

The key concept, as laid out in the brief, is to identify partisan bias with deviation from symmetry. I'll quote briefly from the brief (which is here; see also Rick Hasen's election law blog for more links) and then mention a couple additional points which we didn't have space there to elaborate on. (I'm interested in this topic for its own sake and also because Gary and I put a lot of effort in the early 90s into figuring this stuff out.)

Here's what we wrote on partisan symmetry in the amicus brief:

The symmetry standard measures fairness in election systems, and is not specific to evaluating gerrymanders. The symmetry standard requires that the electoral system treat similarly-situated political parties equally, so that each receives the same fraction of legislative seats for a particular vote percentage as the other party would receive if it had received the same percentage. In other words, it compares how both parties would fare hypothetically if they each (in turn) had received a given percentage of the vote. The difference in how parties would fare is the “partisan bias” of the electoral system. Symmetry, however, does not require proportionality.

For example, suppose the Democratic Party receives an average of 55% of the vote total across a state’s district elections and, because of the way the district lines were drawn, it wins 70% of the legislative seats in that state. Is that fair? It depends on a comparison with the opposite hypothetical outcome: it would be fair only if the Republican Party would have received 70% of the seats in an election where it had received an average of 55% of the vote totals in district elections. This electoral system would be biased against the Republican Party if it garners anything fewer than 70% of the seats and biased against the Democratic Party if the Republicans receive any more than 70%.

A couple of other things we didn't have space to include

1. To measure symmetry, both parties need to be in contention. For example, there's no way to determine if the districting system is "fair" to a third party that gets 20% of the vote, since it's unrealistic to extrapolate to what would happen if the Democrats and Republicans get 20% of the vote. This connects to the idea that the "ifs" needed to evaluate partisan bias are based on extrapolation rules that are extensions of uniform partisan swing. If one party is always getting 70% of the statewide vote, our symmetry measure isn't so relevant.

2. Our measure (and related measures) are not about "gerrymandering" at all (in the sense that "gerrymandering" refers to deliberate creation of wacky districts) but rather are measures of symmetry (and, by implication, fairness) of the electoral system.

Thus, one could crudely imagine a 2 x 2 grid, defined by "gerrymandering / no-gerrymandering" and "symmetric / not-symmetric". It's easy to imagine districting plans in all four of these quadrants. We're saying that the social-science standard is to look at symmetry, not at gerrymandering--thus, at outcomes, not intentions, and in fact, at state-level outcomes, not district-level intentions. This is a huge point, I think.
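The symmetry comparison described in the brief can be sketched in a few lines. This is only a toy illustration under plain uniform partisan swing (every district shifts by the same amount), not the method used in the brief or in our papers, which rely on more careful extrapolation rules. The function names and the district vote shares are made up, and shifted shares are not clipped to the range 0 to 1.

```python
def seat_share(dem_shares, statewide_dem):
    """Democratic seat share after a uniform swing to the given average vote."""
    mean = sum(dem_shares) / len(dem_shares)
    swing = statewide_dem - mean
    wins = sum(1 for s in dem_shares if s + swing > 0.5)
    return wins / len(dem_shares)

def partisan_bias(dem_shares, vote_share):
    """Dem seats at vote_share minus Rep seats if Reps had that same vote_share.

    Positive values favor the Democrats, negative values favor the Republicans.
    """
    dem_seats = seat_share(dem_shares, vote_share)
    rep_seats = 1.0 - seat_share(dem_shares, 1.0 - vote_share)
    return dem_seats - rep_seats

# Hypothetical plans: one symmetric, one with Democrats packed into one district.
symmetric_plan = [0.32, 0.44, 0.56, 0.68]
packed_plan = [0.80, 0.45, 0.45, 0.45, 0.45]

print(partisan_bias(symmetric_plan, 0.55))
print(partisan_bias(packed_plan, 0.52))
```

Note that nothing in the calculation asks who drew the lines or why: it looks only at how votes translate into seats for each party in turn, which is the outcomes-not-intentions point made above.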

I received the following one-sentence email from a Ph.D. statistician who works in finance:

For every one time I use stochastic calculus I use statistics 99 times.

Not that stochastic calculus isn't important...after all, I go to the bathroom more often than I use statistics, but that doesn't mean we need Ph.D. courses in pooping...but still, it says something, I think.

Chris Paulse pointed me to this magazine article entitled "Bayes rules: a once-neglected statistical technique may help to explain how the mind works," featuring a paper by Thomas Griffiths and Joshua Tenenbaum (see quick review here by Michael Stastny). The paper examines the ability of people to use partial information to "make predictions about the duration or extent of everyday phenomena such as human life spans and the box-office take of movies." Griffiths and Tenenbaum find that many people's predictions can be modeled as Bayesian inferences.

This is all fine and interesting. Based on my knowledge of other experiments of this sort, I'm a bit skeptical--I suspect that different people use different sorts of reasoning to make these predictive estimates--but of course this is why psychologists such as Griffiths and Tenenbaum do research in this area.

Historical background

But I do have a problem with the article about this study that appeared in the Economist magazine. The article makes a big deal about the differences between the "Bayesian" and "frequentist" schools of statistics. First off, I'm surprised that they think that frequentist methods "dominate the field and are used to predict things as diverse as the outcomes of elections and preferences for chocolate bars." They should take a look at some recent issues of JASA or at the new book by Peter Rossi, Greg Allenby, and Rob McCulloch on Bayesian statistics and marketing. More generally, they don't seem to realize that psychologists have been modeling decision making as Bayesians for decades--that's the basis of the "expected utility model" of von Neumann etc.

The statistical distinction between "estimation" and "prediction"

But I have a more important point to make--or, at least, a more statistical point. Classical statistics distinguishes between estimation and prediction. Basically, you estimate parameters and you predict observables. This is not just a semantic distinction. Parameters are those things that generalize to future studies, observables are ends in themselves. If the joint distribution of all the knowns and unknowns is written as a directed acyclic graph, the arrows go from parameters to observables and not the other way around. Or, to put it another way, one instance of a parameter can correspond to many observables.

Anyway, frequentist statistics treats estimation and prediction differently. For example, in frequentist statistics, theta.hat is an unbiased estimate if E(theta.hat|theta) = theta, for any theta. But y.hat is an unbiased prediction if E(y.hat|theta) = E(y|theta) for any theta. Note the difference: the frequentist averages over y, but not over theta. (See pages 258-249, and the footnote on page 411, of Bayesian Data Analysis (second edition) for more on this.) Frequentist inference thus makes this technical distinction between estimation and prediction (for example, consider the terms "best linear unbiased estimation" and "best linear unbiased prediction").

Unfair to frequentists

Another way of putting this is: everybody, frequentists and Bayesians alike, agrees that it's appropriate to be Bayesian for predictions. (This is particularly clear in time series analysis, for example.) The debate arises over what to do with estimation: whether or not to average over a distribution for those unknown thetas. So the Economist is misleading in describing the Griffiths/Tenenbaum result as a poke in the eye to frequentists. A good frequentist will treat these problems as predictions and apply Bayesian inference.

(Although, I have to admit, there is some really silly frequentist reasoning out there, such as the "doomsday argument"; see here and here, and here.)

1. Figure out your educational goals--what you want your students to be able to do once the semester is over. Write the final exam--something that you think the students should be able to do when the course is over, and that you think the students will be able to do.

2. Show the exam to some colleagues and former students to get a sense of whether it is too hard (or, less likely, too easy). Alter it accordingly.

3. Structure the course around the textbook. Even if it's not such a great textbook, do not cover topics out of order, and avoid skipping chapters or adding material except where absolutely necessary.

4. Set up the homework assignments for each week. Make sure that these include lots of practice in the skills needed to pass the exam.

5. Figure out what the students will need to learn each week to do the homeworks. Don't "cover" anything in class that you will not be having them do in hwks and exam. (You can do whatever you want in class, but if the students don't practice it, you're not really "covering" it.)

6. Write exams and homeworks so they are easy to grade. I also recommend having a short easy quiz every week in class. The students need lots of practice. This is at all levels, not just intro classes.

7. Every lecture, reinforce the message of what skills the students need to master. (To get an idea of how the students are thinking, picture yourself studying something you're not very good at but need to learn (swimming? Spanish? saxophone?). There are some skills you want to learn, you want to practice, but it's hard, and it helps if you are cajoled and otherwise motivated to continue.)

This is not the only way to go, of course; it's just my suggestion for a relatively easy way to get started and not get overwhelmed. Especially remember point 1 about the goals.

Some helpful resources:

First Day to Final Grade, by Curzan and Damour. This is nominally a book for graduate students but actually is relevant for all levels of teaching. Especially since I think that "lectures" should be taught more like "sections" anyway.

Something I read about legal consulting made me think about statistical consulting.

Don Boudreaux (an economist) points to an op-ed by Josh Sheptow (a law student) who argues that "pro bono work by elite lawyers is a staggeringly inefficient way to provide legal services to low-income clients." Basically, he says that, instead of doing 10 hours of pro-bono work for a legal-aid society, a $500/hr corporate lawyer would be better off just working 10 more hours at the law firm and then donating some fraction of the resulting $5000 to the legal aid society. Sheptow writes, "For lawyers who have done pro bono work, cutting a check might not seem as glamorous as getting out in the trenches and helping low-income clients face to face, but it's a much more efficient way to deliver legal services to those in need." The discussants at Boudreaux's site make some interesting points, and I don't have much to add to the argument one way or another. But it did make me think about statistical consulting.

Statistical consulting

I have two consulting rates: a high rate for people who can afford it (and are willing to pay) and zero otherwise. Also, I'll give free consulting to just about anybody who walks in the door. My main criteria for the free consulting are that they're doing something which seems socially useful (i.e., it's something that I'd rather see done well than done poorly) and that they're planning to listen to my advice. (It's soooo frustrating to give lots of suggestions and then be ignored. One nice thing about being paid is that then they usually don't ignore you.) For paid consulting, I like the project to be interesting, and then, to be honest, I'm less stringent on the "socially useful" part--I just don't want to work for somebody who seems to be doing something really bad. (That's all from my perspective. I have no objection if other statisticians consult for organizations that I don't like.)

Oh yeah, and once I declined to consult for a waste-management company located in Brooklyn. Lost out on a chance for some interesting life experience there, I think.

Anyway, I don't really do any "pro bono" work that is comparable to providing legal services for indigents. I'm not quite sure what the equivalent would be, in statistical work. Regression modeling and forecasting for nonprofits? Statistical consulting in some legal cases? I don't know what tradition there is of public-service work in statistics. Of course I'd like to believe that almost all my work is public service, in some sense--giving students tools to work more effectively, participating in scientific research projects, and so forth--but that's not quite the same thing. And yes, we give to charity (thus following the "Sheptow" strategy), but I do think that, ideally, there'd be some more direct public-service aspect to what we do.

I blogged on this awhile ago and then recently Hal Stern and I wrote a little more on the topic. Here's the paper, and here's the abstract:

A common error in statistical analyses is to summarize comparisons by declarations of statistical significance or non-significance. There are a number of difficulties with this approach. First is the oft-cited dictum that statistical significance is not the same as practical significance. Another difficulty is that this dichotomization into significant and non-significant results encourages the dismissal of observed differences in favor of the usually less interesting null hypothesis of no difference. Here, we focus on a less commonly noted problem, namely that changes in statistical significance are not themselves significant. By this, we are not merely making the commonplace observation that any particular threshold is arbitrary--for example, only a small change is required to move an estimate from a 5.1% significance level to 4.9%, thus moving it into statistical significance. Rather, we are pointing out that even large changes in significance levels can correspond to small, non-significant changes in the underlying variables. We illustrate with a theoretical and an applied example.
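The arithmetic behind that last point is easy to check. The sketch below uses hypothetical numbers chosen for illustration (two estimates with the same standard error) and assumes the two estimates are independent, so the standard error of their difference is the root sum of squares.

```python
import math


def z_score(estimate, std_error):
    """Estimate divided by its standard error."""
    return estimate / std_error


# Hypothetical estimates and standard errors from two separate studies.
est_a, se_a = 25.0, 10.0
est_b, se_b = 10.0, 10.0

z_a = z_score(est_a, se_a)  # 2.5: "significant" at the usual 5% level
z_b = z_score(est_b, se_b)  # 1.0: "not significant"

# Compare the two studies directly (independence is an assumption here).
diff = est_a - est_b
se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
z_diff = z_score(diff, se_diff)  # about 1.06: the difference is not significant

print(f"study A: z = {z_a:.2f}")
print(f"study B: z = {z_b:.2f}")
print(f"A minus B: {diff:.1f} with s.e. {se_diff:.1f}, z = {z_diff:.2f}")
```

One study clears the conventional threshold and the other does not, yet the difference between them is only about one standard error from zero.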

The paper has a nice little multiple-comparisons-type example from the biological literature--an example where the scientists ran several experiments and (mistakenly) characterized them by their levels of statistical significance.

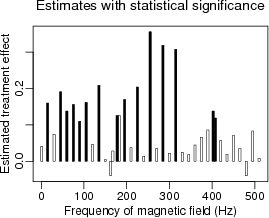

Here's the bad approach:

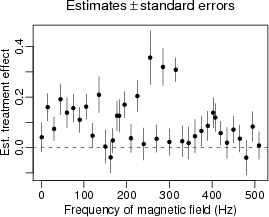

Here's something better which simply displays estimates and standard errors, letting the scientists draw their own conclusions from the overall pattern:

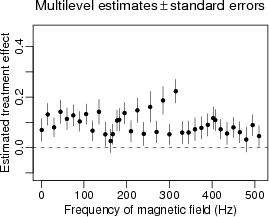

Here's something slightly better, using multilevel models to get a better estimate for each separate experiment:

The original paper where these data appeared was really amazing in the extreme and unsupported claims it made based on non-significant differences in statistical significance.

As part of a "carbon trading" program, a program is being instituted to reduce energy use for streetlights in a developing country. Here's how it works: (1) "baseline" energy use is established for the existing street light system, (2) some of the lights will be replaced with new lights that are more energy efficient and will thus consume less energy, and (3) the company that does the installation will be reimbursed based on the reduction in consumption. (No reduction, no money).

Simple enough on paper, but we live in a messy world. For example, the electricity provided by the grid is often substantially below the nominal voltage, so the existing lamps (which do not include voltage regulators) often put out much less light than they should, but also consume less electricity than they should. The new lights include voltage regulators so they always operate at their nominal power consumption. It's entirely possible that replacing the old lights with the new ones will increase the light output but generate no energy savings (or even negative savings) and thus no reduction in carbon dioxide production.

One possibility would be to use new lamps that have the same light output as the current lamps, rather than the same nominal energy consumption. But it's not clear that the municipalities involved will agree to that, for one thing. (For instance, the voltage that is provided varies with time, so even though the existing lamps often operate well below their nominal light output, they sometimes do achieve it). Also, lamps only come in discrete steps of light output, so there may be no way to provide the same amount of light as is currently provided.

Another problem --- the one that prompted this blog entry --- is how to establish the baseline energy use, and determine the energy savings of the replacement lamps. Lamps are not individually metered, although meters can be installed temporarily (at some expense). The actual energy consumption of an existing lamp, and its light output, depend on the lamp's age and on the voltage that it gets. As mentioned above, the voltage varies with time...but it does so differently for different lamps, depending on the distance from the power plant and on the local electric loads. There seem to be no existing records on voltage-vs-time for any locations, much less for the large number of towns that might participate in this program.

Louis Menand writes in the New Yorker about literary prizes:

The Nobel Prize in Literature was the first of the major modern cultural prizes. It was soon followed by the Prix Goncourt (first awarded in 1903) and the Pulitzer Prizes (conceived in 1904, first awarded in 1917). The Academy of Motion Picture Arts and Sciences started handing out its prizes in 1929; the Emmys began in 1949, the Grammys in 1959. Since the nineteen-seventies, English says, there has been an explosion of new cultural prizes and awards. There are now more movie awards given out every year—about nine thousand—than there are new movies, and the number of literary prizes is climbing much faster than the number of books published. . . .[James] English [author of "The Economy of Prestige"] interprets the rise of the prize as part of the “struggle for power to produce value, which means power to confer value on that which does not intrinsically possess it.” In an information, or "symbolic," economy, in other words, the goods themselves are physically worthless: they are mere print on a page or code on a disk. What makes them valuable is the recognition that they are valuable. This recognition is not automatic and intuitive; it has to be constructed.

That's all fine, but I wonder if there's something else going on, analogous to grade inflation: awards benefit the award-giver and the award-receiver. For example, the statistics department at Columbia instituted a graduate teaching award a few years ago. This is a win-win situation: the student who gets the award is happy about the recognition and can put it on his or her resume, but also it's good for the department--it can motivate all, or at least several, of our teaching assistants to try harder (to the extent that the award is transparently and fairly administered), and it can help our best graduate students get top jobs, which in turn is good for the department also. Similarly, awards within an academic discipline can motivate and recognize good work and also provide publicity and book sales for the field as a whole. And, unlike the Oscars, Nobel Prizes, etc., these little awards can be cheap to administer.

So, as with grade inflation, the immediate motivation of all parties is to increase the number of awards. But it can get out of control! For example, here is Princeton University Press's list of its books from 2005 that received awards. It's a looong list, including an amazing 13 different political science books that received a total of 18 awards. This many awards all in one place devalues all of them a bit, I think. (Not that I'm too proud to claim what awards I receive, of course...)

The real question?

Thus maybe the real question is not, Why so many awards?, but rather, Why is the number of awards increasing? If giving awards has always been such a great idea, why haven't there always been so many awards? I don't know, just as I don't know why there isn't more grade inflation, or why there aren't more campaign contributions.

P.S.

See here for other comments on this topic by Mark Thoma (link from New Economist). Thoma talks about awards as a signal to consumers, whereas I'm thinking more about the motivation of the organizations that give the awards.

Thoma's comments are interesting although I don't really know what he gets out of saying "A good is valuable because it yields utility." Isn't that just circular reasoning? Or is this just a truism that economists like to say, just as statisticians have our own cliches ("correlation is not causation," "statistical significance is not the same as practical significance," and so forth)?

I've been learning more about the lmer function (part of Doug Bates's lme4 package) in R--it's just so convenient, I think I'll be using it more and more as a starting point in my analyses (which I can then check using Bugs and, as necessary, Umacs). Anyway, I came across this interesting recent discussion in the R help archive:

I just finished Bernard Crick's wonderful 1980 biography of George Orwell. Lots of great stuff. Here's something from page 434:

Just before they moved to Mortimer Crescent [in 1942], Eileen [Orwell's wife] changed jobs. She now worked in the Ministry of Food preparing recipes and scripts for 'The Kitchen Front', which the BBC broadcast each morning. These short programmes were prepared in the Ministry because it was a matter of Government policy to urge or restrain the people from eating unrationed foods according to their official estimates, often wrong, of availability. It was the time of the famous 'Potatoes are Good For You' campaign, with its attendant Potato Pie recipes, which was so successful that another campaign had to follow immediately: 'Potatoes are Fattening'.

Crick continues,

Several studies have been performed in the last few years looking at the economic decisions of parents of sons, as compared to parents of daughters. For example, Tyler Cowen links to a report of a study by Andrew Oswald and Nattavudh Powdthavee that "provides evidence that daughters make people more left wing. Having sons, by contrast, makes them more right wing":

Professor Oswald and Dr Powdthavee drew their data from the British Household Panel Survey, which has monitored 10,000 adults in 5,500 households each year since 1991 and is regarded as an accurate tracker of social and economic change. Among parents with two children who voted for the Left (Labour or Lib Dem), the mean number of daughters was higher than the mean number of sons. The same applied to parents with three or four children. Of those parents with three sons and no daughters, 67 per cent voted Left. In households with three daughters and no sons, the figure was 77 per cent.

I've seen some other studies recently with similar findings--a few years ago, a couple of economists found that having daughters, as compared to sons, was associated with the probability of divorce, I think it was, and recently a study by Ebonya Washington found that for Congressmembers, those with daughters (as compared to sons) were more likely to have liberal voting records on women's issues.

Controlling for the number of children: an intermediate outcome

A common feature of all these studies is that they control for the total number of children. This can be seen in the quote above, for example: they compare different sorts of families with 2 kids, then make a separate comparison of different sorts of families with 3 kids.

At first sight, controlling for the total number of children seems reasonable. There is a difficulty, however, in that the total number of kids is an intermediate outcome, and controlling for it (whether by subsetting the data based on #kids or using #kids as a control variable in a regression model) can bias the estimate of the causal effect of having a son (or daughter).

To see this, suppose (hypothetically) that politically conservative parents are more likely to want sons, and if they have two daughters, they are (hypothetically) more likely to try for a third kid. In comparison, liberals are more likely to stop at two daughters. In this case, if you look at data on families with 2 daughters, the conservatives will be underrepresented, and the data could show a correlation of daughters with political liberalism--even if having the daughters has no effect at all!

A solution

A solution is to apply the standard conservative (in the statistical sense!) approach to causal inference, which is to regress on your treatment variable (sex of kid) but controlling only for things that happen before the kid is born. For example, one could compare parents whose first child is a girl to parents whose first child is a boy. One can also look at the second birth, comparing parents whose second child is a girl to those whose second child is a boy--controlling for the sex of the first child. And so on for third child, etc.
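A small simulation shows both the problem and the fix. Everything in this sketch is hypothetical: the stopping rule and its probabilities are invented to match the story above, and the sex of the children is given no effect at all on the parents' politics. The only thing that differs by politics is who goes on to have a third kid after two daughters.

```python
import random


def simulate_family(rng):
    """Return (is_liberal, kids), where kids is a list with True = daughter.

    Hypothetical rule: after two daughters, conservatives try for a third
    child with probability 0.8 and liberals with probability 0.2.
    All other families have a third child with probability 0.5.
    """
    liberal = rng.random() < 0.5
    kids = [rng.random() < 0.5 for _ in range(2)]
    if all(kids):
        p_third = 0.2 if liberal else 0.8
    else:
        p_third = 0.5
    if rng.random() < p_third:
        kids.append(rng.random() < 0.5)
    return liberal, kids


def liberal_share(families):
    """Fraction of families in the list whose parents are liberal."""
    return sum(liberal for liberal, _ in families) / len(families)


rng = random.Random(0)
families = [simulate_family(rng) for _ in range(200_000)]

# Biased comparison: condition on the total number of kids (an intermediate outcome).
two_kid = [f for f in families if len(f[1]) == 2]
two_daughters = [f for f in two_kid if all(f[1])]
two_sons = [f for f in two_kid if not any(f[1])]

# Safer comparison: condition only on the sex of the first child.
first_girl = [f for f in families if f[1][0]]
first_boy = [f for f in families if not f[1][0]]

print("2-kid families, two daughters:", round(liberal_share(two_daughters), 3))
print("2-kid families, two sons:     ", round(liberal_share(two_sons), 3))
print("all families, first kid girl: ", round(liberal_share(first_girl), 3))
print("all families, first kid boy:  ", round(liberal_share(first_boy), 3))
```

Among two-kid families, the two-daughter group looks much more liberal than the two-son group, purely because of who stopped at two. Comparing on the sex of the first child, the two groups look about the same, as they should in a world where kids have no effect.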

The modeling could get interesting here, since there is a sort of pyramid of coefficients (one for the first-kid model, two for the second-kid model (controlling for first kid), and so forth). It might be reasonable to expect coefficients to gradually decline (I assume the effect of the first kid would be the biggest), and one could estimate that with some sort of hierarchical model.

Summary

I'm not saying that all these researchers are wrong; merely that, by controlling for an intermediate outcome, they're subject to a potential bias. Also they could redo their analyses without much effort, I think, to fix the biases and address this concern. I hope they do so (and inform me of their results).

It's an interesting example because we all know not to control for intermediate outcomes, but the total # of kids somehow doesn't look like that, at first.

P.S.

See here for more discussion of the U.K. voting example.

I was trying to draw Bert and Ernie the other day, and it was really difficult. I had pictures of them right next to me, but my drawings were just incredibly crude, more "linguistic" than "visual" in the sense that I was portraying key aspects of Bert and Ernie but in pictures that didn't look anything like them. I knew that drawing was difficult--every once in awhile, I sit for an hour to draw a scene, and it's always a lot of work to get it to look anything like what I'm seeing--but I didn't realize it would be so hard to draw cartoon characters!

This got me to thinking about the students in my statistics classes. When I ask them to sketch a scatterplot of data, or to plot some function, they can never draw a realistic-looking picture. Their density functions don't go to zero in the tails, the scatter in their scatterplots does not match their standard deviations, E(y|x) does not equal their regression line, and so forth. For example, when asked to draw a potential scatterplot of earnings vs. height, they have difficulty with the x-axis (most people are between 60 and 75 inches in height) and having the data consistent with the regression line, while having all earnings be nonnegative. (Yes, it's better to model on the log scale or whatever, but that's not the point of this exercise.)

Anyway, the students just can't make these graphs look right, which has always frustrated me. But my Bert and Ernie experience suggests that I'm thinking of it the wrong way. Maybe they need lots and lots of practice before they can draw realistic functions and scatterplots.

I commented a few weeks ago on Andy Nathan's review of a recent biography of Mao. Beyond the inherent interest of the subject matter, Nathan's review was interesting to me because it explored questions of the reliability of historical evidence.

Those of you who are interested in this sort of thing might be interested in the following exchange of letters in a recent issue of the London Review of Books. It's an entertaining shootout between Jung Chang and Jon Halliday on one side, and Andy Nathan on the other.

Recent studies by police departments and researchers confirm that police stop racial and ethnic minority citizens more often than whites, relative to their proportions in the population. However, it has been argued that stop rates more accurately reflect rates of crimes committed by each ethnic group, or that stop rates reflect elevated rates in specific social areas such as neighborhoods or precincts. Most of the research on stop rates and police-citizen interactions has focused on traffic stops, and analyses of pedestrian stops are rare. In this paper, we analyze data from 125,000 pedestrian stops by the New York Police Department over a fifteen-month period. We disaggregate stops by police precinct, and compare stop rates by racial and ethnic group controlling for previous race-specific arrest rates. We use hierarchical multilevel models to adjust for precinct-level variability, thus directly addressing the question of geographic heterogeneity that arises in the analysis of pedestrian stops. We find that persons of African and Hispanic descent were stopped more frequently than whites, even after controlling for precinct variability and race-specific estimates of crime participation.

Here's the paper (by Jeff Fagan, Alex Kiss, and myself) with the details. The work came out of a project we did with the New York State Attorney General's Office a few years ago.

If you're interested in this topic, you might also take a look at Nicola Persico's page on police stops data. (Our dataset had confidentiality restrictions so we couldn't place it on Nicola's site.)

Mauro Caputo writes,

I was searching on-line for some useful tutorials on Dempster-Shafer theory. I need to get up to speed quickly on this area to see if I can apply it to a particular problem. I found your paper on "The boxer, the wrestler, and the coin flip", and kinda got stuck on the belief functions section. Is there an undergrad textbook or tutorial paper on Dempster-Shafer that I could use to teach myself enough to understand and apply DST? I haven't been able to find one.

I referred him to the references in my paper but perhaps someone knows of something more recent and more applied than these references?

Yingnian writes,

I accumulated quite a number of intuitions about Monte Carlo in particular, and statistics in general, for teaching purpose. I think they can be made obvious to elementary school children.

MCMC can be visualized by a population of say 1 million people immigrating from state to state (e.g., 51 states on US territory) where transition prob is like the fraction of people in California who will move to Texas the next day. So p^{(t)} is a movie of population distribution over time. If all 1 million people start from California, the population will spread over all 51 states, and stabilize at a distribution which is the stationary distribution.

Metropolis-Hastings is just a treaty between the visa offices of any two countries x and y. It can be any c(x, y) = c(y, x), and the fraction of people who get the visa is c(x, y)/[pi(x)T(x, y)], since pi(x)T(x, y) is the number of people who go to apply for visa. To choose c(x, y) = min(pi(x)T(x, y), pi(y)T(y, x)) is just to maximize the immigration flow. Here the detailed balance means there is a balance between any two states, like China and US. There are more Chinese applying visa to US than vice versa, so we can let all US people go to China, but only accept a fraction of Chinese people to go to US (I just use this example for the benefit of intuition, without any disrespect for US or Chinese people). This is the acceptance probability.
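The visa-office picture can be checked numerically. The sketch below is an illustration, not part of Yingnian's note: the three-state target pi and the proposal matrix T are made-up numbers, and the function names are hypothetical. It builds the Metropolis-Hastings transition matrix from c(x, y) = min(pi(x)T(x, y), pi(y)T(y, x)), then runs the "movie" p^{(t)} with everyone starting in state 0.

```python
def mh_transition_matrix(pi, T):
    """Transition matrix of the Metropolis-Hastings chain on a finite state space.

    pi: target distribution; T: proposal matrix (rows sum to 1, zero diagonal).
    The visa acceptance fraction from x to y is c(x, y) / (pi[x] * T[x][y]).
    """
    n = len(pi)
    P = [[0.0] * n for _ in range(n)]
    for x in range(n):
        for y in range(n):
            if x != y and T[x][y] > 0:
                applicants = pi[x] * T[x][y]            # people applying to move x -> y
                c = min(applicants, pi[y] * T[y][x])    # agreed flow, symmetric in x and y
                P[x][y] = T[x][y] * (c / applicants)
        P[x][x] = 1.0 - sum(P[x])                       # rejected applicants stay home
    return P


def step(p, P):
    """One day of migration: the new population distribution p times P."""
    n = len(p)
    return [sum(p[x] * P[x][y] for x in range(n)) for y in range(n)]


# Hypothetical target and proposal, chosen only for illustration.
pi = [0.5, 0.3, 0.2]
T = [[0.0, 0.7, 0.3],
     [0.4, 0.0, 0.6],
     [0.5, 0.5, 0.0]]
P = mh_transition_matrix(pi, T)

p = [1.0, 0.0, 0.0]  # everybody starts in state 0
for _ in range(200):
    p = step(p, P)

print("population after 200 steps:", [round(v, 4) for v in p])
print("target distribution:       ", pi)
```

The flows pi(x)P(x, y) and pi(y)P(y, x) match for every pair of states, which is detailed balance, and the population settles down to the target distribution.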

Adi Wyner, Dean Foster, Shane Jensen, and Dylan Small at the University of Pennsylvania have started a new statistics blog, Politically Incorrect Statistics. My favorite entry so far compares string theory to intelligent design. While I'm linking, the Journal of Obnoxious Statistics looks like fun, although I haven't read many of the 101 pages yet.

When I tell people about my work, by far the most common response is "Oh, I hated statistics in college." We've been over that before. Sometimes someone will ask me to explain the Monty Hall problem. Anyway, another one I've been getting a lot lately is whether I watch the show Numbers. I've never seen it (I don't have cable), but I'm a little curious about it--does anyone out there watch it? Is it any good? Just wondering....

My friend Mark Glickman (I call him Glickman; Andrew calls him Smiley) has some fun statistical song parodies. When I was taking Bayesian Data Analysis in graduate school, he came in as a guest lecturer one day and sang them for our class. It was really fun--I don't think there's anywhere near enough silliness in most statistics classes. Click here for some music and lyrics.

Dan Ho, Kosuke Imai, Gary King, and Liz Stuart have a new paper on matching methods for causal inference. It has lots of practical advice and interesting examples, and I predict that it will be widely read and cited. Check it out here.

...and on a completely unrelated note, Happy Birthday, Mom!!

I was telling my class the other day about the Gibbs sampler and Metropolis algorithm and was describing them as random walks--for example, with the Gibbs sampler you consider a drunk who is walking at random on the Manhattan street grid (in the hypothetical world in which the Manhattan street grid is rectilinear). But then I realized that's not right, because a Gibbs jump can go as far as necessary in any direction--it is not limited to one step. It's actually the path of a drunken rook! From there, it's natural to think of the Metropolis algorithm as a drunken knight. Or maybe a drunken king, if the jumps are small.

To take this analogy further: parameter expansion (i.e., redundant parameterization) allows rotations of parameter space, thus allowing the rooks to move diagonally as well as rectilinearly--thus, a drunken queen! I don't know if these analogies helped the students, but I like them.
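For anyone who wants to watch the drunken rook in action, here is a minimal sketch of a Gibbs sampler. The target is an assumption picked for convenience, a bivariate normal with unit variances and correlation rho, for which each conditional distribution is itself normal. The function name and settings are hypothetical.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_iter, rng, start=(0.0, 0.0)):
    """Gibbs sampler for a standard bivariate normal with correlation rho.

    Returns the full path, recording the position after every single-coordinate
    update, so consecutive points differ in only one coordinate (a rook move).
    """
    x, y = start
    sd = math.sqrt(1.0 - rho ** 2)
    path = [(x, y)]
    for _ in range(n_iter):
        x = rng.gauss(rho * y, sd)  # move along the x-axis, any distance
        path.append((x, y))
        y = rng.gauss(rho * x, sd)  # move along the y-axis, any distance
        path.append((x, y))
    return path


rng = random.Random(42)
path = gibbs_bivariate_normal(rho=0.8, n_iter=5, rng=rng)
for (x0, y0), (x1, y1) in zip(path, path[1:]):
    direction = "horizontal" if y0 == y1 else "vertical"
    print(f"{direction:10s} move to ({x1:6.2f}, {y1:6.2f})")
```

Each move is along a single axis, and its length is whatever the conditional distribution says, not one block at a time.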

Encouraged by Carrie's plug, I read Leslie Savan's book, "Slam Dunks and No Brainers":

It's an entertaining and thought-provoking look at "pop language," which is a particular kind of enjoyable and powerful cliche that we use in speech (and sometimes in writing) to convey "attitude" (that is, 'tude). I'm not quite sure where the boundary falls between rote phrases (e.g., "entertaining and thought-provoking"), cliches (e.g., "it was raining like hell out there"), pop language (e.g., "chill out, dude"), and jokes (e.g., "he's not the sharpest tool in the shed"). But I think the idea is that pop phrases are sometimes just fun to say (sort of like the linguistic equivalent of putting on a fancy outfit or driving a sports car) or powerful ("chill out" is hard to respond to!). Reading the book was fun, sort of the way it's fun to see a movie that was shot in one's hometown, or the way it's fun (and disturbing) to see logos for McDonalds etc. in foreign countries.

Savan argues that we've gone overboard with these phrases (I think that "going overboard" is a cliche but not a pop phrase) and they make us dumber, substituting pre-cooked thoughts for original thoughts, substituting scripted exchanges for spontaneous interactions, and so forth. I don't have anything to say about this claim--except that I'm not sure exactly how it could be studied, and I would also consider making the opposite claim, which is that cliched thought-modules actually allow us to express more sophisticated concepts in plainer language (see here and here). She makes her argument from a politically-liberal perspective (by using pop phrases, we're acting more like passive consumers than like politically-involved citizens) but I think a similar case could be made from the conservative direction in terms of loss of traditional values.

As always, the important question is, How does this relate to statistics teaching?

But my main point of reference when reading this book was my experience as a statistics teacher. I use these phrases in class all the time, and I enjoy when my students use them too. (The first time I ever heard "Woo-hoo" uttered by anyone other than Homer Simpson was in 1994 when a student used the phrase in my decision analysis class.) Why do I use pop phrases? They're fun to say, they help project my image as a cool, down-to-earth dude ("down-to-earth" is a cliche, I think; "dude" is pop), they make the students laugh, thus relaxing their muscles so I can cram that extra bit of statistics into them (just kidding on that one, but it is pleasant to hear them laugh; more on this below).

When pop phrases don't pop

One of the themes of Savan's book is that pop talk is so fun and powerful, it's no surprise that we do more and more of it. But it can backfire. Maybe simply by ticking people off, but also because many of my graduate students and colleagues are not from the United States, and they "just don't get it" sometimes. Just as our kickball references fall flat to people who were not kids in American schoolyards, similarly, those without our backgrounds (or just of different age groups) won't appreciate references to "identical cousins" or that episode on the Brady Bunch where Peter's voice changed. Or even many of the phrases in Savan's book. I've found even Canadians to be baffled by what we would consider fundamental pop phrases (and I'm sure I'd be baffled by theirs, too).

This really comes up when I give talks to foreign audiences. Even setting aside language difficulties and the need to speak slowly, I have to tone down my popisms. Also in other settings . . . for example, the profs in the stat dept here are almost all from other countries. Once in a faculty meeting, I responded to someone's statement with a fast, high-pitched, Eddie-Murphy-style, "Get the fuck outta here." My colleagues were offended. They didn't catch the Murphy reference and thought I was saying "Fuck" to this guy. Which, of course, I wasn't.

For a teacher, the other drawback to pop phrases is that they can be more memorable than the points they are used to illustrate. This probably happens all the time with me. My tendency is to go for the easy laugh--and the bar is set so low in a statistics class that just about any pop reference will get a laugh--without always keeping my eyes on the prize (cliche, not pop phrase, right?), which is to give the students the tools they need to be able to solve problems. (That last phrase sounds like a cliche, I know it does, but it's not!, I swear!)

Anyway, that's my point, my one suggested addition to Savan's book: pop language can actually impede conversation, get in the way of conveying meaning, when we speak to people outside the in-group. I think this will always limit the extent of pop phrases, at least for those of us who need to communicate to people from other countries.

I just found this (at Daniel Radosh's webpage) to be hilarious. Also all the links, like this and this. Actually, my favorite was this, which for convenience I'll copy in its entirety, first the picture, then the words.

Stef writes,

In the preparation of my inaugural lecture I am searching for literature on what I momentarily would call "modular statistics". It is widely accepted that everything that varies in the model should be part of the loss function/likelihood, etc. For some reasonably complex models, this may lead to estimation errors, and worse, problems in the interpretation, or in possibilities to tamper with the model. It is often possible to analyse the data sequentially, as a kind of poor man's data analysis. For example, first FA then regression (instead of LISREL), first impute, then complete-data analysis (instead of EM/Gibbs), first quantify then anything (instead of gifi-techniques), first match on propensity, then t-test (instead of correction for confounding by introducing covariates), and so on. This is simpler and sometimes conceptually more defensible, but of course at the expense of fit to the data.

It depends on the situation whether the latter is a real problem. There must be statisticians out there that have written on the factors to take into account when deciding between these two strategies, but until now, I have been unable to locate them. Any idea where to look?

My reply:

One paper you can look at is by Xiao-Li Meng in Statistical Science in 1994, on "congeniality" of imputations which addresses some of these issues.

More generally, it is a common feature of applied Bayesian statistics that different pieces of info come from different sources and then they are combined. For example, you get the "data" from 1 study and the "prior" from a literature review. Full Bayes would imply analyzing all the data at once but in practice we don't always do that.

Even more generally, I've long thought that the bootstrap and similar methods have this modular feature. (I've called it the two-model approach, but I prefer your term "modular statistics"). The bootstrap literature is all about what bootstrap replication to use, what's the real sampling distribution, should you do parametric or nonparametric bootstrap, how to bootstrap with time series and spatial data, etc. But the elephant in the room that never gets mentioned is the theta-hat, the estimate that's getting "bootstrapped." I've always thought of bootstrap as being "modular" (to use your term) because the model (or implicit model) used to construct theta-hat is not necessarily the model used for the bootstrapping.
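To make the modularity concrete, here is a minimal sketch in Python (my assumption, not code from any of the papers mentioned here). The function name `bootstrap_se` and its arguments are hypothetical. The resampling step knows nothing about how theta-hat is constructed: the estimator is passed in as a separate piece, so any model, or no model at all, can sit behind it.

```python
import numpy as np

def bootstrap_se(data, estimator, n_boot=1000, seed=0):
    """Nonparametric bootstrap standard error of an arbitrary estimator.

    The resampling scheme (i.i.d. draws with replacement) is one module;
    the estimator that produces theta-hat is another, supplied by the caller.
    """
    rng = np.random.default_rng(seed)
    data = np.asarray(data)
    n = len(data)
    reps = np.array([
        estimator(data[rng.integers(0, n, size=n)])  # resample rows, re-estimate
        for _ in range(n_boot)
    ])
    return reps.std(ddof=1)

# Same resampling module, three different theta-hats (simulated data).
y = np.random.default_rng(42).normal(0.0, 1.0, size=200)
se_mean = bootstrap_se(y, np.mean)
se_median = bootstrap_se(y, np.median)
se_trimmed = bootstrap_se(y, lambda v: np.sort(v)[20:-20].mean())
```

The i.i.d. resampling here is itself a modeling choice, and it would be the wrong one for time series, spatial data, or a fixed set of units.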

Sometimes bootstrapping (or similar ideas) can give the wrong answer but other two-level models can work well. For example, in our 1990 JASA paper, Gary King and I were estimating counterfactuals about elections for Congress. We needed to set up a model for what could have happened had the election gone differently. A natural approach would have been to "bootstrap" the 400 or so elections in a year, to get different versions of what would have happened. But that would have been wrong, because the districts were fixed and not a sample from a larger population. We did something better which was to use a hierarchical model. It was challenging because we had only 1 observation per district (it was important for our analysis to do a separate analysis for each year), so we estimated the crucial hierarchical variance parameter using a separate analysis of data from several years. Thus, a modular model. (We also did some missing data imputation.) So this is an example where we really used this approach, and we really needed to. Another reference on it is here.

Even more generally, I've started to use the phrase "secret weapon" to describe the policy of fitting a separate model to each of several data sets (e.g., data from surveys at different time points) and then plotting the sequence of estimates. This is a form of modular inference that has worked well for me. See this paper (almost all of the graphs, also the footnote on page 27) and also this blog entry. In these cases, the second analysis (combining all the inferences) is implicit, but it's still there. Sometimes I also call this "poor man's Gibbs".
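Here is a rough sketch of the "secret weapon" in Python, assuming a simple linear regression fit separately to each data set. The function names `fit_ols` and `secret_weapon` and the simulated survey data are hypothetical, made up for illustration, and not taken from the paper linked above.

```python
import numpy as np

def fit_ols(x, y):
    """Fit y = a + b*x by least squares; return (slope, standard error)."""
    A = np.column_stack([np.ones(len(x)), x])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    sigma2 = resid @ resid / (len(y) - 2)
    cov = sigma2 * np.linalg.inv(A.T @ A)
    return beta[1], np.sqrt(cov[1, 1])

def secret_weapon(datasets):
    """datasets: dict mapping a label (e.g. survey year) to (x, y).

    Each data set gets its own separate fit; nothing is pooled.
    """
    return {label: fit_ols(x, y) for label, (x, y) in sorted(datasets.items())}

# Simulated surveys in which the true slope drifts upward over time.
rng = np.random.default_rng(0)
surveys = {}
for k, year in enumerate(range(2000, 2005)):
    x = rng.normal(size=200)
    surveys[year] = (x, 1.0 + (0.5 + 0.1 * k) * x + rng.normal(size=200))

for year, (est, se) in secret_weapon(surveys).items():
    print(year, round(est, 2), "+/-", round(se, 2))
```

Plotting the estimates with their standard errors against year is the step where the second, implicit analysis happens: the eye does the combining.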

Stef replied,

Eric Tassone sent me this graph from a U.S. Treasury Department press release. The graph is so ugly I put it below the fold.

Anyway, it got me to thinking about a famous point that Orwell made, that one reason that propaganda is often poorly written is that the propagandist wants to give a particular impression while being vague on the details. But we should all be aware of how we write:

[The English language] becomes ugly and inaccurate because our thoughts are foolish, but the slovenliness of our language makes it easier for us to have foolish thoughts.

Tufte made a similar point about graphs for learning vs. graphs for propaganda.

Here are (some) of the errors in the Treasury Department graph:

1. Displaying only 3 years gives minimal historical perspective. This is particularly clear, given that the avg rate of unemployment displayed is from 1960 to 2005.

2. Jobs are not normalized to population. Actually, since unemployment rate is being displayed, it's not clear that anything is gained by showing jobs also.

3. Double-y-axis graph is very hard to read and emphasizes a meaningless point on the graph where the lines cross. Use 2 separate graphs (or, probably better, just get rid of the "jobs" line entirely).

4. Axes are crowded. x-axis should be labeled every year, not every 2 months. y-axis could just have labels at 4%, 5%, 6%. And if you want to display jobs, display them in millions, not thousands! I mean, what were they thinking??

5. Horizontal lines at 129, 130, etc., add nothing but clutter.

To return to the Orwell article, I think there are two things going on. First, there's an obvious political motivation for starting the graph in 2003. And also for not dividing jobs by population. But the other errors are just generic poor practice. And, as Junk Charts has illustrated many times, it happens all the time that people use too short a time scale for their graphs, even when they get no benefit from doing so. So, my take on it: there are a lot of people out there who make basic graphical mistakes because they don't know better. But when you're trying to make a questionable political point, there's an extra motivation for being sloppy. This sort of graph is comparable to the paragraphs that Orwell quoted in "Politics and the English Language": the general message is clear, but when you try to pin down the exact meanings of the words, the logic becomes less convincing.

OK, here's the graph:

Stef van Buuren, Jaap Brand, C.G.M. Groothuis, and Don Rubin wrote a paper evaluating the "chained equations" method of multiple imputation--that is, the method of imputing each variable using a regression model conditional on all the others, iteratively cycling through all the variables that contain missing data. Versions of this "algorithm" are implemented as MICE (which can be downloaded directly from R) and IVEware (a SAS package). (I put "algorithm" in quotes because you still have to decide what model to use--typically, what variables to include as predictors--in each of the imputation steps.)
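For readers who want to see the cycling spelled out, here is a minimal sketch in Python. It is not the MICE or IVEware implementation, and the function name `chained_equations_impute` is hypothetical. It assumes every variable is continuous, that each is imputed from a linear regression on all the others, and that each column has at least one observed value. It also holds the fitted coefficients fixed when adding noise, where a proper implementation would draw them from their posterior distribution. The default of five iterations is an arbitrary choice for this sketch.

```python
import numpy as np

def chained_equations_impute(X, n_iter=5, seed=0):
    """Return one completed copy of X (2-D float array, NaN = missing)."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    missing = np.isnan(X)
    filled = X.copy()
    n, p = X.shape

    # Starting values: random draws from each variable's observed values.
    for j in range(p):
        m = missing[:, j]
        filled[m, j] = rng.choice(X[~m, j], size=int(m.sum()))

    for _ in range(n_iter):
        for j in range(p):            # cycle through the variables
            m = missing[:, j]
            if not m.any():
                continue
            # Predictors: intercept plus all other variables, current fill-ins.
            A = np.column_stack([np.ones(n), np.delete(filled, j, axis=1)])
            beta, *_ = np.linalg.lstsq(A[~m], filled[~m, j], rcond=None)
            resid = filled[~m, j] - A[~m] @ beta
            dof = max(int((~m).sum()) - A.shape[1], 1)
            sigma = np.sqrt(resid @ resid / dof)
            # Impute as prediction plus noise, not just the fitted value.
            filled[m, j] = A[m] @ beta + rng.normal(0.0, sigma, size=int(m.sum()))
    return filled
```

Calling the function with several different seeds gives several completed data sets, which is the "multiple" in multiple imputation. The modeling decision flagged above, which predictors go into each regression, is hard-coded here as "all of them".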

Here's the paper, and here's the abstract:

The use of the Gibbs sampler with fully conditionally specified models, where the distribution of each variable given the other variables is the starting point, has become a popular method to create imputations in incomplete multivariate data. The theoretical weakness of this approach is that the specified conditional densities can be incompatible, and therefore the stationary distribution to which the Gibbs sampler attempts to converge may not exist. This study investigates practical consequences of this problem by means of simulation. Missing data are created under four different missing data mechanisms. Attention is given to the statistical behavior under compatible and incompatible models. The results indicate that multiple imputation produces essentially unbiased estimates with appropriate coverage in the simple cases investigated, even for the incompatible models. Of particular interest is that these results were produced using only five Gibbs iterations starting from a simple draw from observed marginal distributions. It thus appears that, despite the theoretical weaknesses, the actual performance of conditional model specification for multivariate imputation can be quite good, and therefore deserves further study.

Here are Stef's webpages on multiple imputation. Multiple imputation was invented by Don Rubin in 1977.

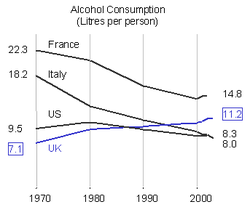

Bad graph:

As is often the case in these situations, the good graph takes up less space, is easier to understand, and is easier to construct.

P.S. I think the "good graph" could be made even better by labeling the y-axis using round numbers. I don't think the exact numbers as displayed there are so helpful. Also, I'd convert to a more recognizable scale. Instead of liters per year, perhaps ounces per day, or the equivalent of glasses of wine per week?

Gregor Gorjanc writes,

My colleague has biological data, which measures degree of DNA damage in cells (Olive Tail Moment - OTM). This data are gathered via so called comet assay test and used to detect genotoxicity of various chemicals, environmental waters, soil, ... The test (this is very imprecise description, but should show the point) is conducted in such a way that say we take blood sample from 10 animals, where first 5 animals are under treatment of interest and the other five are used for control. Specified type of cells is extracted from blood sample, "processed" and finally a set of those cells (usually around 100) is scored for OTM.

Our Columbia colleague Andy Nathan recently wrote a review of a recent biography of Mao. My interest here lies not so much in the subject matter (important though it is) but rather in Nathan's comments about historical research methods, in particular, for the key issue of what can or should be believed.

I don't need art to be work-related. In fact, I generally prefer that it's not. But there's an exhibition at MOMA called SAFE: Design Takes On Risk, that looks pretty cool. Items range from practical (chairs with well-placed hooks to hide a purse) to pseudo-practical (suitcase-like containers to keep bananas from getting bruised) to borderline neurotic (slip-on fork covers). And earplugs. Lots of earplugs. For those who don't live in the city or just don't want to shell out the $20 entrance fee, there's an online exhibition: http://moma.org/exhibitions/2005/safe/.

I recently met Carlos Davidson, a prof at Cal State University. He studies amphibians, with a special interest in why frogs in California are disappearing. He said that he can "predict quite well whether a site will have frogs, based on the pesticide use upwind" and that he thinks that pesticides are a big part of the problem. But he also said that others in his field are far from convinced. What should it take to be convincing? Is there a "statistical" answer to questions like, which is more important: lab work, more field work, more analysis of existing field data (perhaps with more covariates included)?

Last week I substitute professed a mathematical statistics course for a friend who was out of town. I was sort of dreading it: interpretation of confidence intervals, Fisher information, AND hypothesis tests, all in one class, less than 24 hours before the start of Thanksgiving break. I didn't have high hopes for the enthusiasm level in the room. BUT it was actually pretty fun. The Cramer-Rao inequality? It's really cool that there's a derivable bound on the variance of an unbiased estimator, and even cooler that that bound happens to be the inverse of the Fisher information. It's not the kind of stuff that comes up much in my own work or that I'd want to do research on myself, but I got a kick out of teaching it.
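For reference, here is the bound written out, under the usual regularity conditions and for n independent draws from a density f(x; θ). The notation is my own choice for this sketch and not necessarily what the course used.

```latex
\operatorname{Var}_\theta(\hat\theta) \;\ge\; \frac{1}{n\,I_1(\theta)},
\qquad
I_1(\theta) = \mathrm{E}_\theta\!\left[\left(\frac{\partial}{\partial\theta}\log f(X;\theta)\right)^{2}\right]

% Example: X_1,\dots,X_n \sim \mathrm{N}(\mu,\sigma^2) with \sigma^2 known.
\frac{\partial}{\partial\mu}\log f(X;\mu) = \frac{X-\mu}{\sigma^2}
\;\Rightarrow\;
I_1(\mu) = \frac{1}{\sigma^2},
\qquad
\operatorname{Var}(\bar X) = \frac{\sigma^2}{n} = \frac{1}{n\,I_1(\mu)}
```

In the normal example the sample mean attains the bound exactly, so no unbiased estimator of the mean can have smaller variance.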

During my visit to George Mason University, Bryan Caplan gave me a draft of his forthcoming book, "The logic of collective belief: the political economy of voter irrationality." The basic argument of the book goes as follows: