Allen Hurlbert writes:

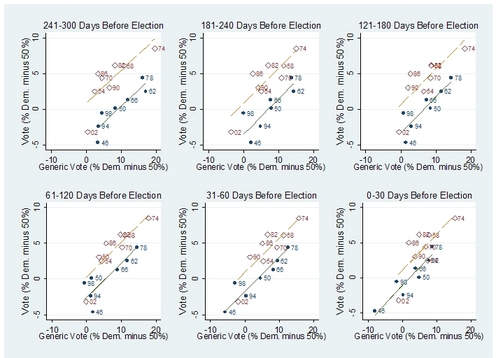

I saw your 538 post [on the partisan allegiances of sports fans] and it reminded me of some playful data analysis I [Hurlbert] did a couple months ago based on NewsMeat.com's compilation of sports celebrity campaign contributions. Glancing through the list I thought I noticed some interesting patterns in the partisan nature of various sports, so I downloaded the data and created this figure:
