A finance professor writes,

I am currently working on a project and am looking for a test. Unfortunately, none of my colleagues can answer my question. I have a series of regressions of the form Y= a + b1*X1 + b2*X2. I am attempting to test whether the restriction b1=b2 is valid over all regressions. So far, I have an F-test based on the restriction for each regression, and also the associated p-value for each regression (there are approximately 600 individual regressions). So far, so good.Is there a way to test whether the restriction is valid "on average"? I had thought of treating the p-values as uniformy distributed and testing them against a null hypothesis that the mean p-value is some level (i.e. 5%).

I figure that there should be a better way. I recall someone saying that a sum of uniformly distributed random variates is distribted Chi-squared (or was that a sum of squared uniforms?). In either case, I can't find a reference.

My response: if the key question is comparing b1 to b2, I'd reparameterize as follows:

y = a + B1*z1 + B2*z2 + error, where z1=(X1+X2)/2, and z2=(X1-X2)/2. (as discussed here)

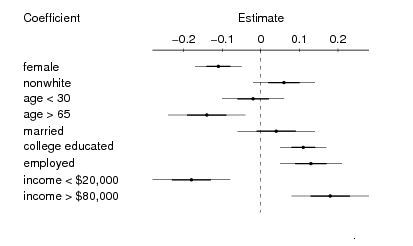

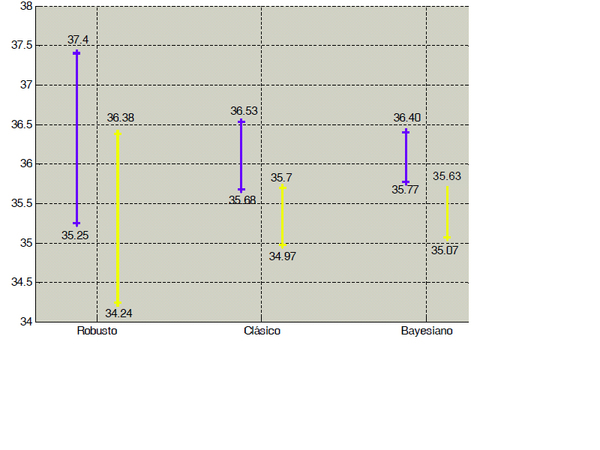

Now you're comparing B2 to zero, which is more straightforward--no need for F-tests, you can just look at the confidence intervals for B2 in each case. And you can work with estimated regression coefficients (which are clean) rather than p-values (which are ugly).

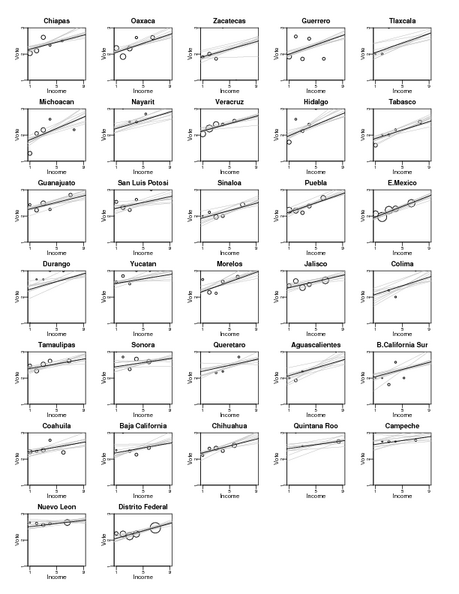

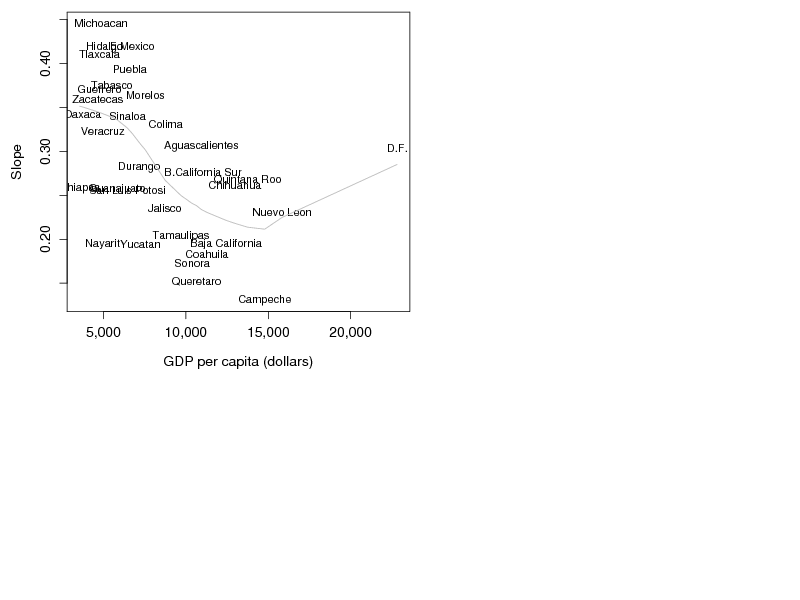

At this point I'd plot the estimates and se's vs. some group-level explanatory variable characterizing the 600 regressions. (That's the "secret weapon.") More formal steps would include running a regression of the estimated B2's on relevant group-level predictors. (Yes, if you have 600 cases, you certainly must have some group-level predictors.) And the next step, of course, is a multilevel model. But at this point I think you've probably already solved your immediate problem.

Recent Comments