I’m pleased as Punch to announce our new paper,

- Nawaf Bou-Rabee, Bob Carpenter, and Milo Marsden. 2024. GIST: Gibbs self-tuning for locally adaptive Hamiltonian Monte Carlo. arXiv 2404.15253.

We followed the mathematician alphabetical author-ordering convention.

The basic idea

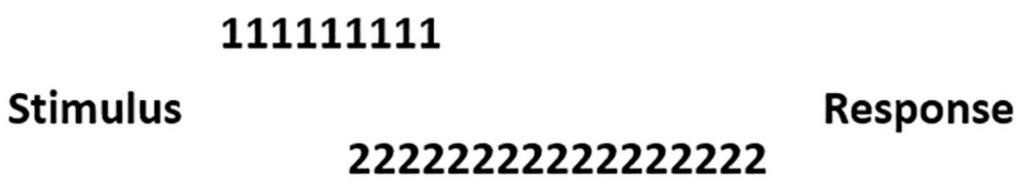

The basic idea is so simple that I’m surprised it’s not more popular. The general GIST sampler couples the HMC tuning parameters (step size, number of steps, mass matrix) with the position (model parameters) and momentum. In each iteration of the Markov chain, we resample the momentum with a Gibbs step (as usual in HMC), then sample the tuning parameters in a second Gibbs step, conditioning on the current position and the resampled momentum. The leapfrog algorithm generates the proposal using the sampled tuning parameters. The accept/reject step then uses the ratio of the joint densities, which now include the conditional density of the tuning parameters. Of course, we need to specify a conditional distribution of tuning parameters to make this concrete.
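Here’s a minimal sketch of one GIST transition, assuming an identity mass matrix and tuning parameters consisting of a step size and a number of steps. The names (`gist_transition`, `sample_tuning`, `log_tuning_density`) are hypothetical and not taken from the paper or the repo. The key point is that the conditional log density of the tuning parameters is evaluated twice: at the starting state and at the proposed state with its momentum flipped.

```python
import math
import random


def leapfrog(theta, rho, grad_log_p, step_size, n_steps):
    """Run n_steps leapfrog steps with an identity mass matrix."""
    theta, rho = list(theta), list(rho)
    grad = grad_log_p(theta)
    for _ in range(n_steps):
        rho = [r + 0.5 * step_size * g for r, g in zip(rho, grad)]
        theta = [t + step_size * r for t, r in zip(theta, rho)]
        grad = grad_log_p(theta)
        rho = [r + 0.5 * step_size * g for r, g in zip(rho, grad)]
    return theta, rho


def log_joint(theta, rho, log_p):
    """log p(theta) + log Normal(rho | 0, I), up to an additive constant."""
    return log_p(theta) - 0.5 * sum(r * r for r in rho)


def gist_transition(theta, log_p, grad_log_p, sample_tuning,
                    log_tuning_density, rng):
    # Gibbs step 1: resample the momentum, as in standard HMC.
    rho = [rng.gauss(0.0, 1.0) for _ in theta]
    # Gibbs step 2: draw tuning parameters conditioned on (theta, rho).
    tuning = sample_tuning(theta, rho, rng)
    step_size, n_steps = tuning
    # Leapfrog proposal followed by a momentum flip, which makes it an involution.
    theta_new, rho_new = leapfrog(theta, rho, grad_log_p, step_size, n_steps)
    rho_new = [-r for r in rho_new]
    # Metropolis ratio of joint densities, now including the tuning conditional.
    log_ratio = (log_joint(theta_new, rho_new, log_p)
                 + log_tuning_density(tuning, theta_new, rho_new)
                 - log_joint(theta, rho, log_p)
                 - log_tuning_density(tuning, theta, rho))
    if rng.random() < math.exp(min(0.0, log_ratio)):
        return theta_new
    return theta
```

With a tuning conditional that ignores the state, such as always returning `(0.2, 10)` with log density 0, this reduces to standard HMC; the interesting cases are conditionals that look at `theta` and `rho`.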

The same idea could be applied to other Metropolis samplers.
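As an illustration of that claim (our own toy, not from the paper), here is random-walk Metropolis with a proposal scale drawn from a position-dependent conditional. The two scales and the rule for choosing between them are hypothetical. Because the normal proposal is symmetric once the scale is fixed, the only extra term in the acceptance ratio is the ratio of conditional probabilities of the chosen scale at the proposed and current positions.

```python
import math
import random

# Hypothetical pair of proposal scales (small and large).
SCALES = (0.5, 3.0)


def scale_probs(theta):
    """Hypothetical conditional p(scale index | theta): favor big jumps in the tails."""
    p_large = 0.8 if abs(theta) > 2.0 else 0.2
    return (1.0 - p_large, p_large)


def gist_rwm_step(theta, log_p, rng):
    # Gibbs step: draw the scale index given the current position.
    probs = scale_probs(theta)
    k = 0 if rng.random() < probs[0] else 1
    # Normal random-walk proposal, symmetric once the scale is fixed.
    proposal = theta + SCALES[k] * rng.gauss(0.0, 1.0)
    # Metropolis ratio on the joint density p(theta) * p(k | theta).
    log_ratio = (log_p(proposal) + math.log(scale_probs(proposal)[k])
                 - log_p(theta) - math.log(probs[k]))
    if rng.random() < math.exp(min(0.0, log_ratio)):
        return proposal
    return theta
```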

Some prior art

We were inspired by a combination of NUTS and some negative results from Nawaf regarding acceptance bounds in delayed rejection generalized HMC (more on that later).

Radford Neal used the coupling idea for the Metropolis acceptance probability in his recent paper Non-reversibly updating a uniform [0,1] value for Metropolis accept/reject decisions. This is for generalized HMC, which is one-step HMC with partial momentum refresh. Because the momentum is only partially refreshed, the momentum flips on rejection matter and will typically thwart directed movement. Neal’s approach clusters the acceptances together so that generalized HMC can make directed movement like HMC itself. Although this fits easily within the GIST framework, the update of the uniform value used for the accept/reject decision doesn’t depend on the current position or momentum.

We (Chirag Modi, Gilad Turok and I) are working on a follow-up to my recent paper with Chirag and Alex Barnett on delayed rejection HMC. The goal there was to properly sample multiscale and varying-scale densities (i.e., “stiff” Hamiltonians). In the follow-up, we’re using generalized HMC with delayed rejection rather than Radford’s coupling idea, and we show it can also generate directed exploration. The paper’s drafted and should be out soon. Spoiler alert! DR-G-HMC adapts to local changes in scale better than DR-HMC, and it’s competitive with NUTS in some problems with varying scales, but not in most problems.

Algorithms that randomize the number of steps (or step size), like those in Changye Wu and Christian Robert’s Faster Hamiltonian Monte Carlo by Learning Leapfrog Scale, can also be seen as instances of GIST. From this view, they couple the number of steps with the state and draw it from a fixed uniform distribution in each iteration, without conditioning on the current position. The general framework immediately suggests biasing those draws toward longer jumps, for example by sampling uniformly from the second half of the trajectory.
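A sketch of the two step-count distributions just mentioned, assuming a maximum number of steps `n_max` and taking the “second half” to be {⌊n_max/2⌋ + 1, …, n_max}. The function names are hypothetical. Note that Python’s `random.randint` includes both endpoints.

```python
import math
import random


def sample_steps_uniform(n_max, rng):
    """Uniform on {1, ..., n_max}; random.randint includes both endpoints."""
    return rng.randint(1, n_max)


def second_half_lower(n_max):
    """Smallest step count in the second half {n_max // 2 + 1, ..., n_max}."""
    return n_max // 2 + 1


def sample_steps_second_half(n_max, rng):
    """Biased toward longer jumps: uniform on the second half of {1, ..., n_max}."""
    return rng.randint(second_half_lower(n_max), n_max)


def log_pmf_second_half(n_steps, n_max):
    """Log probability of n_steps under sample_steps_second_half."""
    lo = second_half_lower(n_max)
    if lo <= n_steps <= n_max:
        return -math.log(n_max - lo + 1)
    return -math.inf
```

When `n_max` is fixed, as in a position-independent scheme, the pmf is the same at both ends of the trajectory and cancels from the acceptance ratio. It only has to be evaluated explicitly once `n_max` depends on the state.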

Sam Livingstone was visiting Flatiron Institute for the last couple of weeks, and when I ran the basic idea by him, he suggested I have a look at the paper by Chris Sherlock, Szymon Urbas, and Matthew Ludkin, The apogee to apogee path sampler. This is a really cool idea that uses U-turns in potential energy (negative log density), which is univariate, rather than in position space. They evolve the Hamiltonian dynamics forward and backward in time for a given number of U-turns in log density, and then sample from among the points on the path (as NUTS does). One issue is that the usual normal distribution on momentum can have trouble moving through the full range of log densities (see, e.g., Sam et al.’s paper on kinetic energy choice in HMC). Because we were going to discuss his paper in our reading group, I wrote to Chris Sherlock, and he told me they have been working on the apogee-to-apogee idea since before NUTS was released! It’s very similar to NUTS, with similar forward/backward-in-time balancing. The other idea it shares with NUTS is a bias toward longer jumps, which is implemented in a really clever way that we can borrow for GIST. Milo and Nawaf figured out how NUTS and the apogee-to-apogee sampler fit into the GIST framework, which simplifies their correctness proofs: once you’ve defined a proper conditional distribution for GIST, you’re done. The apogee-to-apogee paper also evaluates a randomized step-size version of HMC they call “blurred” HMC (which, of course, fits into the general GIST framework the same way the Wu and Robert sampler does).
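Here’s a rough sketch of how one might detect apogees along a leapfrog path. It assumes an identity mass matrix and treats an apogee as a local maximum of the potential energy U = −log p along the path, detected as the time derivative dU/dt = ∇U · ρ changing sign from positive to non-positive. This is our reading of the description above, not code from Sherlock, Urbas, and Ludkin, and the function names are hypothetical.

```python
def leapfrog_step(theta, rho, grad_log_p, eps):
    """One leapfrog step with an identity mass matrix."""
    g = grad_log_p(theta)
    rho = [r + 0.5 * eps * gi for r, gi in zip(rho, g)]
    theta = [t + eps * r for t, r in zip(theta, rho)]
    g = grad_log_p(theta)
    rho = [r + 0.5 * eps * gi for r, gi in zip(rho, g)]
    return theta, rho


def potential_rate(theta, rho, grad_log_p):
    """d/dt U(theta(t)) = grad U . rho, with U = -log p and identity mass."""
    return -sum(g * r for g, r in zip(grad_log_p(theta), rho))


def path_to_apogees(theta, rho, grad_log_p, eps, n_apogees, max_steps=10_000):
    """Positions visited until the potential energy has peaked n_apogees times."""
    path = [list(theta)]
    rate = potential_rate(theta, rho, grad_log_p)
    found = 0
    for _ in range(max_steps):
        theta, rho = leapfrog_step(theta, rho, grad_log_p, eps)
        path.append(theta)
        new_rate = potential_rate(theta, rho, grad_log_p)
        if rate > 0.0 >= new_rate:  # U was rising and is now falling: an apogee
            found += 1
            if found == n_apogees:
                break
        rate = new_rate
    return path
```

This only traces the path forward; the backward-in-time extension and the choice of a point from the path are left out.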

If you know of other prior art or examples, we’d love to hear about them.

Our concrete alternative to NUTS

The alternative to NUTS we discuss in the GIST paper proposes a number of steps by iterating the leapfrog algorithm until a U-turn, then randomly sampling a point along the trajectory (like the improvement to the original NUTS that Michael Betancourt introduced, or the apogee-to-apogee sampler). We then use a crude biasing mechanism (compared to the apogee-to-apogee sampler or NUTS) toward longer paths. That’s it, really. To evaluate the acceptance probability, we also have to run a trajectory from the sampled point backward in time until a U-turn, so you don’t really get away from the forward-and-backward computation in any of these samplers.
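A minimal sketch of this kind of sampler, under several assumptions that are ours rather than the paper’s: an identity mass matrix; a one-directional U-turn check that stops at the first step N with (θ_N − θ_0) · ρ_N < 0; and a number of steps drawn uniformly from {⌊f·N⌋ + 1, …, N}, where the hypothetical `lower_frac` f stands in for a biasing mechanism we don’t reproduce here. The reverse probability comes from running the same U-turn check from the proposed point with its momentum flipped.

```python
import math
import random


def leapfrog_step(theta, rho, grad_log_p, eps):
    """One leapfrog step with an identity mass matrix."""
    g = grad_log_p(theta)
    rho = [r + 0.5 * eps * gi for r, gi in zip(rho, g)]
    theta = [t + eps * r for t, r in zip(theta, rho)]
    g = grad_log_p(theta)
    rho = [r + 0.5 * eps * gi for r, gi in zip(rho, g)]
    return theta, rho


def u_turn_path(theta, rho, grad_log_p, eps, max_steps):
    """States (theta_n, rho_n) for n = 0..N, where N is the first step with
    (theta_N - theta_0) . rho_N < 0, capped at max_steps."""
    path = [(list(theta), list(rho))]
    theta0 = theta
    for _ in range(max_steps):
        theta, rho = leapfrog_step(theta, rho, grad_log_p, eps)
        path.append((theta, rho))
        if sum((t - t0) * r for t, t0, r in zip(theta, theta0, rho)) < 0.0:
            break
    return path


def log_pmf_steps(n_steps, n_uturn, lower_frac):
    """Uniform on {floor(lower_frac * N) + 1, ..., N}, with 0 <= lower_frac < 1."""
    lo = int(lower_frac * n_uturn) + 1
    if lo <= n_steps <= n_uturn:
        return -math.log(n_uturn - lo + 1)
    return -math.inf


def gist_u_turn_step(theta, log_p, grad_log_p, eps, rng,
                     lower_frac=0.0, max_steps=1000):
    rho = [rng.gauss(0.0, 1.0) for _ in theta]
    # Run forward until a U-turn, then pick a number of steps along that path.
    fwd = u_turn_path(theta, rho, grad_log_p, eps, max_steps)
    n_fwd = len(fwd) - 1
    n_steps = rng.randint(int(lower_frac * n_fwd) + 1, n_fwd)
    theta_new, rho_new = fwd[n_steps]
    rho_new = [-r for r in rho_new]
    # From the flipped endpoint, run until a U-turn to get the reverse pmf.
    n_bwd = len(u_turn_path(theta_new, rho_new, grad_log_p, eps, max_steps)) - 1
    log_ratio = (log_p(theta_new) - 0.5 * sum(r * r for r in rho_new)
                 + log_pmf_steps(n_steps, n_bwd, lower_frac)
                 - log_p(theta) + 0.5 * sum(r * r for r in rho)
                 - log_pmf_steps(n_steps, n_fwd, lower_frac))
    if rng.random() < math.exp(min(0.0, log_ratio)):
        return theta_new
    return theta
```

Setting `lower_frac=0.5` restricts draws to the second half of the path. If the backward U-turn arrives before `n_steps`, the reverse pmf is zero and the proposal is rejected.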

We evaluate mean-square jump distance, rejections, and errors on parameter estimates and squared parameter estimates. The new sampler is a little behind NUTS in performance, but mostly in the same ballpark. In most cases, the variance among NUTS runs was greater than the difference between the mean run times of NUTS and the new algorithm. The evals demonstrate why it’s necessary to look at both parameter and squared parameter estimates. As draws become more anti-correlated, which is what happens when you maximize expected squared jump distance, estimates for parameters improve, but error goes way up on estimates of squared parameters. I provide an example in this Stan forum post, which was inspired by a visit with Wu and Robert to discuss their randomized steps algorithm. Also, before we submit to a journal, I need to scale all the root-mean-square-error calculations to Z scores.
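To see why both kinds of estimates matter, here’s a small simulation (not from the paper) that uses a stationary Gaussian AR(1) chain with unit marginal variance as a stand-in for MCMC draws from a standard normal. With a negative coefficient φ the draws are anti-correlated, so jump distance goes up and the error in estimating E[θ] goes down. But θ² has lag-k autocorrelation φ^(2k), which is positive, so the error in estimating E[θ²] goes up. Dividing each RMSE by the standard deviation of θ (1) or θ² (√2) is one hypothetical way to put errors on a Z-score scale.

```python
import math
import random


def ar1_chain(phi, n, rng):
    """Stationary Gaussian AR(1) chain with unit marginal variance."""
    x = rng.gauss(0.0, 1.0)
    scale = math.sqrt(1.0 - phi * phi)
    draws = []
    for _ in range(n):
        x = phi * x + scale * rng.gauss(0.0, 1.0)
        draws.append(x)
    return draws


def msjd(draws):
    """Mean squared jump distance between consecutive draws."""
    return sum((b - a) ** 2 for a, b in zip(draws, draws[1:])) / (len(draws) - 1)


def standardized_rmse(phi, n_draws, n_reps, rng):
    """RMSE of the estimates of E[theta] = 0 and E[theta^2] = 1 over n_reps chains,
    divided by the marginal sd of theta (1) and theta^2 (sqrt(2)), respectively."""
    sq_err_1 = sq_err_2 = 0.0
    for _ in range(n_reps):
        d = ar1_chain(phi, n_draws, rng)
        est_1 = sum(d) / n_draws
        est_2 = sum(x * x for x in d) / n_draws
        sq_err_1 += est_1 ** 2
        sq_err_2 += (est_2 - 1.0) ** 2
    return (math.sqrt(sq_err_1 / n_reps),
            math.sqrt(sq_err_2 / n_reps) / math.sqrt(2.0))
```

With φ = −0.9, the effective sample size for the mean is about 19 times the number of draws, while for θ² it is about (1 − φ²)/(1 + φ²) ≈ 0.1 times the number of draws.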

What we’re excited about here is that it’s going to be easy to couple step-size adaptation. We might even be able to adapt the mass matrix this way and get a cheap approximation to Riemannian HMC.
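A sketch of what a coupled step-size conditional might look like. Everything here is a hypothetical choice for illustration, not from the paper: a reference step that shrinks where the gradient is large, and a lognormal draw around it with spread `sigma`. Because the conditional depends on position, its log density has to be evaluated at both the starting point and the proposed point in the acceptance ratio.

```python
import math
import random


def reference_step(theta, grad_log_p, base_step):
    """Hypothetical local scale: shrink the step where the gradient is large."""
    g = grad_log_p(theta)
    return base_step / math.sqrt(1.0 + sum(gi * gi for gi in g))


def sample_step_size(theta, grad_log_p, rng, base_step=0.5, sigma=0.3):
    """Draw a step size from a lognormal centered (in log space) on the reference step."""
    mu = math.log(reference_step(theta, grad_log_p, base_step))
    return rng.lognormvariate(mu, sigma)


def log_step_size_density(eps, theta, grad_log_p, base_step=0.5, sigma=0.3):
    """Lognormal log density of eps given theta, including the -log(eps) Jacobian."""
    mu = math.log(reference_step(theta, grad_log_p, base_step))
    z = (math.log(eps) - mu) / sigma
    return (-math.log(eps) - math.log(sigma)
            - 0.5 * math.log(2.0 * math.pi) - 0.5 * z * z)
```

The same sampled step size is used at both ends, so the Jacobian term cancels in the ratio; only the difference in the position-dependent center matters.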

Try it out

The evaluation code is up under an MIT License on my GitHub repo adaptive-hmc. I’m about to go add some more user-facing doc on how to run the evaluations. I’m really a product coder at heart, so I always find it challenging to hit the right level of doc/clarity/robustness/readability with research code. To get log densities and gradients from Stan models to develop our algorithm, we used BridgeStan.

I’m always happy to get suggestions on improving my code, so feel free to open issues or send me email.

Thank you!

This project would’ve been much harder if I hadn’t gotten feedback on the basic idea and code from Stan developer and stats prof Edward Roualdes. We also wouldn’t have been able to do this easily if Edward hadn’t developed BridgeStan. This kind of code is so prone to off-by-one, numerator-vs-denominator, negative-vs-positive, and log-vs-exponent mistakes that it makes my head spin. It doesn’t help that I’ve moved from R to Python, where the upper bounds are exclusive! Edward found a couple of dingers in my original code. Thanks also to Chirag for helping me understand the general framework and for help with the code. Both Edward and Chirag are working on better alternatives to our simple alternative to NUTS, which will be showing up in the same adaptive HMC repo. Just keep in mind this is all research code!

What’s next?

Hopefully we’ll be rolling out a bunch of effective GIST samplers soon. Or even better, maybe you will…

P.S. In-person math and computing

The turnaround time on this paper from conception to arXiv is about the fastest I’ve ever been involved with (outside of simple theoretical notes I used to dash out). I think the speed comes from two things: (1) the idea is easy, and (2) Milo, Nawaf and I spent four days together at Rutgers about a month ago working pretty much full time on this paper (with some time-outs for teaching and talks!). We started with a basic idea, then over those four days worked out all the theory, developed the alternative to NUTS, and tried some variants. It’s very intense working like that, but it can be super productive. We even triple coded on the big screen as we developed the algorithm and evaluated alternatives. Then we divided the work of writing the paper cleanly among us; as always, modularity is the key to scaling.